HOWTO

Our HOWTO collection contains short tutorials that help you step through some of the common (but potentially confusing) tasks users may need to accomplish, that do not quite rise to the level of requiring more structured training materials. Items here may explain a procedure to follow, or present a "best practices" formula that we think may be helpful.

Using Software on Pitzer RHEL 7

While OSC has upgraded the Pitzer cluster to RHEL 9, you may encounter difficulties when migrating jobs from RHEL 7 to the new system. To help you continue your research, we provide a containerized RHEL 7 environment on Pitzer RHEL 9. This container replicates the original RHEL 7 system and software environment used on Pitzer.

Note: This containerized RHEL 7 environment is a temporary solution and may be terminated at any time without prior notice.

Reusing Job Scripts

Assume you have an existing job script that previously worked on Pitzer RHEL 7 (e.g., my_rhel7_job.sh):

#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=4
my_rhel7_program

To run this script within the RHEL 7 container on Pitzer RHEL 9, prepare a new job script that uses the container wrapper, such as my_rhel7_job_in_container.sh:

#!/bin/bash
#SBATCH --nodes=1
#SBATCH --ntasks-per-node=4
/apps/share/tools/rhel7_wrapper.sh ./my_rhel7_job.sh

Then make my_rhel7_job.sh executable and submit the job:

chmod +x my_rhel7_job.sh
sbatch my_rhel7_job_in_container.sh

Running an MPI program

We have disabled Slurm support inside the container due to certain technical issues. Therefore, any Slurm-specific commands in your job script (such as srun or sbcast) will not work. You should replace them with alternatives such as mpirun/mpiexec and cp, respectively.

Please note that MVAPICH2 is built only with Slurm support, so there is no native mpirun/mpiexec command available for it inside the container. Instead, you can use Intel-MPI or OpenMPI, which provide their own mpiexec commands.

Below are example replacements for srun:

# OpenMPI
mpiexec --bind-to none <your_program>
# Intel-MPI
mpiexec -launcher ssh <your_program>

GPU support and extra bind path

If your job requires GPUs, append the --nv option:

/apps/share/tools/rhel7_wrapper.sh --nv ./my_rhel7_job.sh

By default, you can access your own home directory. If you need access to another user's home directory that has been shared with you, use the --bind option:

/apps/share/tools/rhel7_wrapper.sh --bind /someone/else/home ./my_rhel7_job.sh
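If you generate many job scripts, the wrapper invocations above could be assembled programmatically. The following is a minimal sketch only: build_wrapper_command is a hypothetical helper, not an OSC tool, and it assumes that --nv and --bind may be combined in one call, which the text above does not state explicitly.

```python
import shlex

WRAPPER = "/apps/share/tools/rhel7_wrapper.sh"  # wrapper path taken from the text above


def build_wrapper_command(job_script, use_gpu=False, bind_paths=()):
    """Return the wrapper command line as a shell-quoted string (hypothetical helper)."""
    args = [WRAPPER]
    if use_gpu:
        args.append("--nv")  # request GPU support inside the container
    for path in bind_paths:
        args.extend(["--bind", path])  # expose an extra directory inside the container
    args.append(job_script)
    return shlex.join(args)


print(build_wrapper_command("./my_rhel7_job.sh", use_gpu=True))
print(build_wrapper_command("./my_rhel7_job.sh", bind_paths=["/someone/else/home"]))
```

The printed lines can be pasted into a job script in place of the hand-written wrapper call.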

Working Interactively in the RHEL 7 Environment

In some cases, you may need to recompile your program within the RHEL 7 environment. You can either use the job script mentioned above or launch an interactive container shell session after starting an interactive job:

/apps/share/tools/rhel7_shell.sh

Once inside the container shell, initialize the RHEL 7 environment by running:

source /etc/profile.d/lmod.sh
source /etc/profile.d/z00_StdEnv.sh
module rm xalt

Or, as a shortcut:

. /apps/share/tools/init_rhel7.sh

You can verify that the RHEL 7 environment is properly set up by running:

module list

The options --nv and --bind are also available in the rhel7_shell.sh script.

HOWTO: Look at requested time accuracy using XDMoD

The XDMoD tool at xdmod.osc.edu can be used to get an overview of how closely the requested time of jobs matches their elapsed time.

To request an amount of time for a job, you can use the following header.

#SBATCH --time=xx:xx:xx

The elapsed time is how long the job ran for before completing. This can be obtained for completed jobs using the sacct command.

$ sacct -u <username> --format=jobid,account,elapsed

It is important to understand that the requested time is used when scheduling a submitted job. A longer requested time will also increase the wait time, as it will take longer to reserve the requested resources for that amount of time. This makes getting the requested job time as close to the expected elapsed time important for minimizing wait times.
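For a handful of jobs, the ratio of elapsed to requested time can be computed directly from sacct output. The sketch below assumes the output was produced with the standard sacct options -X, --parsable2 and --noheader and the fields jobid, elapsed and timelimit; the job IDs and times in the sample are made up for illustration, and the function names are hypothetical.

```python
def slurm_time_to_seconds(text):
    """Convert a Slurm duration such as '1-02:03:04' or '02:03:04' to seconds."""
    days, _, clock = text.rpartition("-")
    parts = [int(p) for p in clock.split(":")]
    while len(parts) < 3:
        parts.insert(0, 0)  # pad missing hours (or minutes) with zero
    hours, minutes, seconds = parts
    return (int(days) if days else 0) * 86400 + hours * 3600 + minutes * 60 + seconds


def requested_time_accuracy(sacct_lines):
    """Map job ID to elapsed/requested for lines shaped like 'jobid|elapsed|timelimit'."""
    ratios = {}
    for line in sacct_lines:
        fields = line.strip().split("|")
        if len(fields) != 3:
            continue
        job_id, elapsed, limit = fields
        # Skip entries without a numeric limit (for example UNLIMITED or empty).
        if not limit[:1].isdigit() or not elapsed[:1].isdigit():
            continue
        requested = slurm_time_to_seconds(limit)
        if requested > 0:
            ratios[job_id] = slurm_time_to_seconds(elapsed) / requested
    return ratios


# Made-up sample in the shape produced by:
#   sacct -u <username> -X --parsable2 --noheader --format=jobid,elapsed,timelimit
sample = [
    "1001|00:30:00|01:00:00",
    "1002|1-00:00:00|2-00:00:00",
    "1003|00:45:00|UNLIMITED",
]
for job_id, ratio in requested_time_accuracy(sample).items():
    print(f"{job_id}: used {ratio:.0%} of the requested time")
```

A ratio close to, but below, 1.0 means the request was a good estimate; a much smaller ratio suggests the request could be shortened.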

The above method of checking elapsed time allows one to view the requested time accuracy for an individual job, but XDMoD can be used to do this for jobs submitted over a specified period of time.

First, log in to xdmod.osc.edu. (See this page for more instructions.)

https://www.osc.edu/supercomputing/knowledge-base/xdmod_tool

Then, navigate to the Metric Explorer tab.

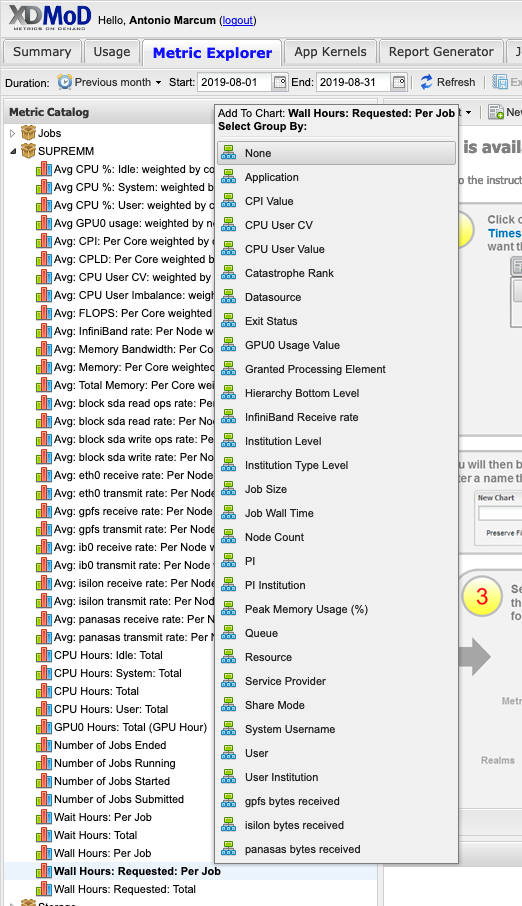

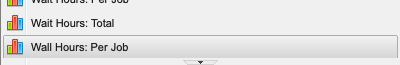

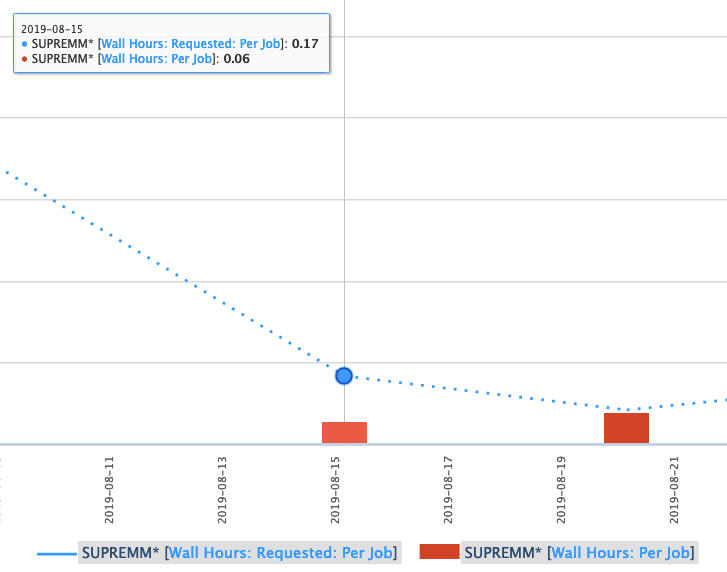

Look for the Metric Catalog on the left side of the page and expand the SUPREMM options. Select Wall Hours: Requested: Per Job and group by None.

This will now show the average time requested.

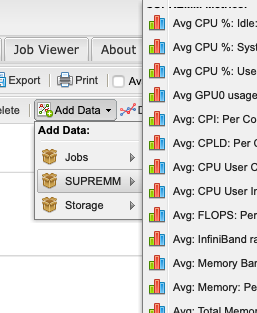

The actual time data can be added by navigating to Add Data -> SUPREMM -> Wall Hours: Per Job.

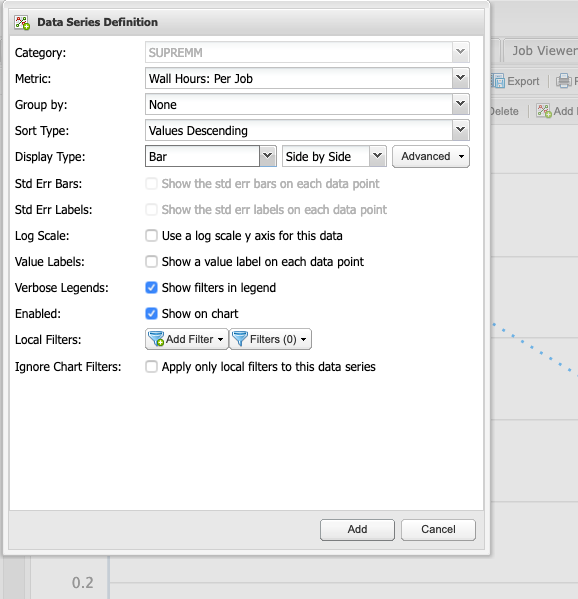

This will open a new window titled Data Series Definition, where you can change some parameters before showing the new data. In order to easily distinguish between elapsed and requested time, change the Display Type to Bar, then click Add to view the new data.

Now there is a line showing the average time requested by submitted jobs, and bars depicting the average time that elapsed to complete those jobs. Essentially, the closer the bar is to the line without intersecting it, the more accurate the time prediction. If the bar intersects the line, it may indicate that not enough time was requested for a job to complete, but remember that these values are averages.

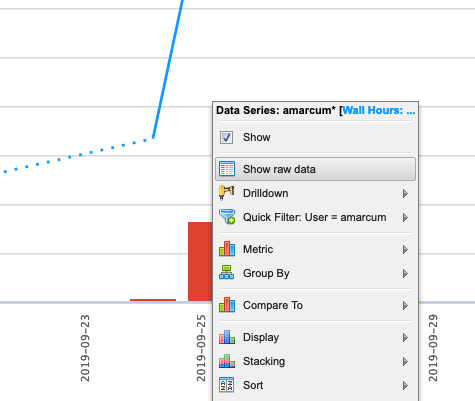

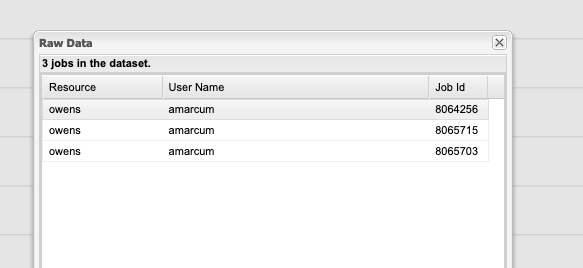

One can also view more detailed information about these jobs by clicking a data point and using the Show raw data option.

HOWTO: Add and Use DUO MFA

Options:

- Duo Mobile: You can install Duo Mobile on your smartphone or tablet. It gives you two ways to authenticate: a Duo Push notification or a Duo Mobile passcode.

- Text Message Passcode: You can register your phone and request a passcode via text message. Duo will send you a single-use passcode via text message.

- Phone Call: You can register your phone and request a phone call. Duo will call you and provide a single-use passcode.

- Security keys: You can purchase security keys such as a YubiKey to access web applications.

- If you need a security key for SSH connections, please purchase YubiKeys that support "OATH - HOTP" and email OSC Help to schedule a meeting and get your security key enrolled in OSC's Duo instance. See the Use YubiKeys with Duo page for additional information.

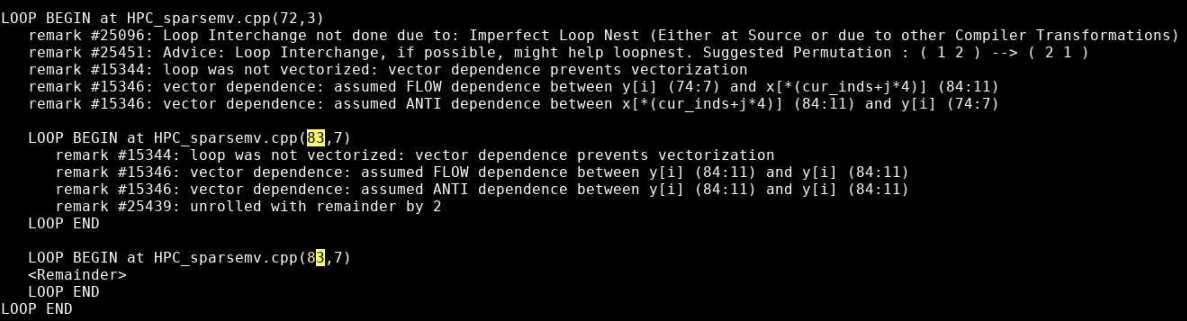

HOWTO: Collect performance data for your program

This page outlines ways to generate and view performance data for your program using tools available at OSC.

Intel Tools

This section describes how to use performance tools from Intel. Make sure that you have an Intel module loaded to use these tools.

Intel VTune

Intel VTune is a tool to generate profile data for your application. Generating profile data with Intel VTune typically involves three steps:

1. Prepare the executable for profiling.

You need executables with debugging information to view source code line detail: re-compile your code with a -g option added among the other appropriate compiler options. For example:

mpicc wave.c -o wave -g -O3

2. Run your code to produce the profile data.

Profiles are normally generated in a batch job. To generate a VTune profile for an MPI program:

mpiexec <mpi args> amplxe-cl <vtune args> <program> <program args>

where <mpi args> represents arguments to be passed to mpiexec, <program> is the executable to be run, <vtune args> represents arguments to be passed to the VTune executable amplxe-cl, and <program args> represents arguments passed to your program.

For example, if you normally run your program with mpiexec -n 12 wave_c, you would use

mpiexec -n 12 amplxe-cl -collect hotspots -result-dir r001hs wave_c

To profile a non-MPI program:

amplxe-cl <vtune args> <program> <program args>

As a result of this step, a subdirectory that contains the profile data files is created in your current directory. The subdirectory name is based on the -result-dir argument and the node id, for example, r001hs.o0674.ten.osc.edu.

3. Analyze your profile data.

You can open the profile data using the VTune GUI in interactive mode. For example:

amplxe-gui r001hs.o0674.ten.osc.edu

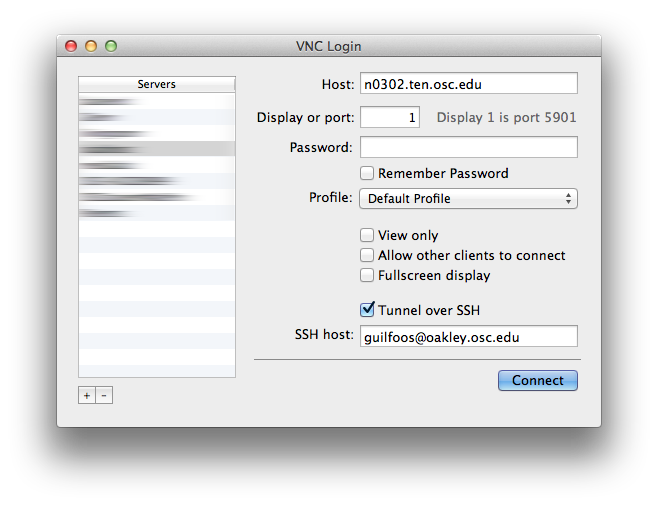

One should use an OnDemand VDI (Virtual Desktop Interface) or have X11 forwarding enabled (see Setting up X Windows). Note that X11 forwarding can be distractingly slow for interactive applications.

Intel APS

Intel's Application Performance Snapshot (APS) is a tool that provides a summary of your application's performance. Profiling HPC software with Intel APS typically involves four steps:

1. Prepare the executable for profiling.

Regular executables can be profiled with Intel APS, but source code line detail will not be available. You need executables with debugging information to view source code line detail: re-compile your code with a -g option added among the other appropriate compiler options. For example:

mpicc wave.c -o wave -tcollect -O3

2. Run your code to produce the profile data directory.

Profiles are normally generated in a batch job. To generate profile data for an MPI program:

mpiexec -trace <mpi args> <program> <program args>

where <mpi args> represents arguments to be passed to mpiexec, <program> is the executable to be run and <program args> represents arguments passed to your program.

For example, if you normally run your program with mpiexec -n 12 wave_c, you would use

mpiexec -trace -n 12 wave_c

To profile a non-MPI program:

aps <program> <program args>

The profile data is saved in a subdirectory in your current directory. The directory name is based on the date and time, for example, aps_result_YYYYMMDD/.

3. Generate the profile file from the directory.

To generate the html profile file from the result subdirectory:

aps --report=./aps_result_YYYYMMDD

to create the file aps_report_YYYYMMDD_HHMMSS.html.

4. Analyze the profile data file.

You can open the profile data file using a web browser on your local desktop computer. This option typically offers the best performance.

Linaro Tools

This section describes how to use performance tools from Linaro (formerly distributed by Arm).

Linaro MAP

Instructions for how to use MAP are available here.

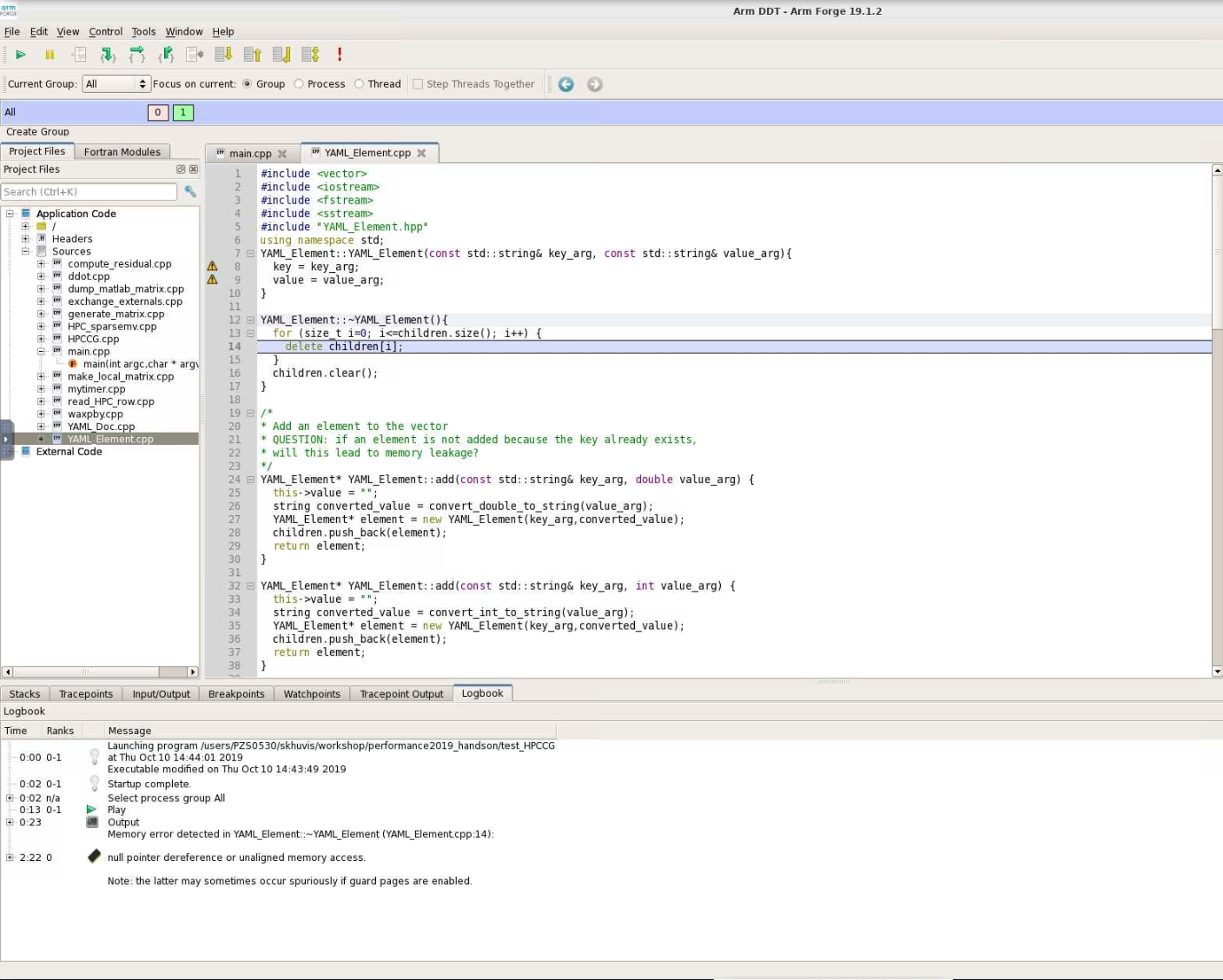

Linaro DDT

Instructions for how to use DDT are available here.

Linaro Performance Reports

Instructions for how to use Performance Reports are available here.

Other Tools

This section describes how to use other performance tools.

HPC Toolkit

Rice University's HPC Toolkit is a collection of performance tools. Instructions for how to use it at OSC are available here.

TAU Commander

TAU Commander is a user interface for University of Oregon's TAU Performance System. Instructions for how to use it at OSC are available here.

HOWTO: Create and Manage Python Environments

While our Python installations come with many popular packages installed, you may come upon a case in which you need an additional package that is not installed. If the specific package you are looking for is available from anaconda.org (formerly binstar.org), you can easily install it and required dependencies by using the conda package manager.

Procedure

The following steps are an example of how to set up a Python environment and install packages to a local directory using conda. We use the name local for the environment, but you may use any other name.

Load proper Python module

We have python and miniconda3 modules, both of which are based on the Conda package manager. The python modules are typically recommended when you use Python in the standard environment that we provide. However, if you want to create your own Python environment, we recommend using the miniconda3 module, since you can start with a minimal configuration.

module load miniconda3/24.1.2-py310

Configure Conda (first time use)

The first time you use conda, it is recommended to configure it to use the desired channels and options. A number of channels exist with different packages and licensing requirements. While academic users are generally unrestricted, commercial users may be subject to terms of service requiring license purchasing. Commercial users are encouraged to check with their organization regarding licensing. Please see Anaconda, Inc. Terms of Service for details.

To avoid using proprietary packages from the defaults channel, users can remove it:

conda config --remove channels defaults

and add the alternative conda-forge channel instead:

conda config --add channels conda-forge

OSC recommends setting strict channel priority:

conda config --set channel_priority strict

If strict channel priority makes required dependencies unavailable, it can be disabled:

conda config --set channel_priority flexible

Create Python installation to local directory

Three alternative create commands are listed. These cover the most common cases.

CREATE NEW ENVIRONMENT

The following will create a minimal Python installation without any extraneous packages:

conda create -n local

CLONE BASE ENVIRONMENT

If you want to clone the full base Python environment from the system, you may use the following create command:

conda create -n local --clone base

CREATE NEW ENVIRONMENT WITH SPECIFIC PACKAGES

You can augment the command above by listing specific packages you would like installed into the environment. For example, the following will create a minimal Python installation with only the specified packages (in this case, numpy and babel):

conda create -n local numpy babel

By default, conda will install the newest versions of the packages it can find. Specific versions can be specified by adding =<version> after the package name. For example, the following will create a Python installation with Python version 2.7 and NumPy version 1.16:

conda create -n local python=2.7 numpy=1.16

CREATE NEW ENVIRONMENT WITH A SPECIFIC LOCATION

By default, conda will create the environment in your home location $HOME. To specify a location where the local environment is created, for example, in the project space /fs/ess/ProjectID, you can use the following command:

conda create --prefix /fs/ess/ProjectID/local

To activate the environment, use the command:

source activate /fs/ess/ProjectID/local

To verify that the environment has been created, use the command:

conda info -e

For additional conda command documentation see https://docs.conda.io/projects/conda/en/latest/commands.html#conda-general-commands

Activate environment

For the bash shell:

source activate local

At the end of the conda create step, you may have seen a message from the installer saying that you can use the conda activate command to activate the environment. Please do not use conda activate, because it will try to update your shell configuration file and may cause other issues. Use source activate as suggested above.

If you have run conda init to enable the conda activate command, your shell configuration file (such as .bashrc) will have been altered with conda-specific lines. After activating your environment with source activate, you may then notice that the source activate/deactivate commands cease to function. However, we will be updating the miniconda3 modules by May 15th, 2024 to ensure that conda activate no longer alters the .bashrc file. Consequently, you can safely remove the conda-related lines between # >>> conda initialize >>> and # <<< conda initialize <<< from your .bashrc file and continue using the conda activate command.

On newer versions of Anaconda on the Pitzer cluster, you may also need to remove the following packages before trying to install your specific packages:

conda remove conda-build

conda remove conda-env

Install packages

To install additional packages, use the conda install command. For example, to install the yt package:

conda install yt

By default, conda will install the newest version of the package that it can find. Specific versions can be specified by adding =<version> after the package name. For example, to install version 1.16 of the NumPy package:

conda install numpy=1.16

If you need to install packages with pip, then you can install pip in your virtual environment by

conda install pip

Then, you can install packages with pip as

pip install PACKAGE

Please make sure that you have installed pip in your environment rather than using the one from the miniconda3 module. The pip from the miniconda3 module will give your environment access to the packages from the module, which may or may not be desired. Also set export PYTHONNOUSERSITE=True to prevent Python from picking up packages from your $HOME/.local path.
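To see whether the interpreter in your environment would still pick up packages from the per-user directory, you can ask Python's standard site module. This is a minimal sketch; user_site_status is a hypothetical helper name, and the values it prints depend on your own shell settings.

```python
import os
import site


def user_site_status():
    """Report whether Python adds the per-user site-packages directory to sys.path."""
    return {
        # Any non-empty value of this variable disables the user site directory.
        "PYTHONNOUSERSITE": os.environ.get("PYTHONNOUSERSITE"),
        "user_site_enabled": bool(site.ENABLE_USER_SITE),
        "user_site_dir": site.getusersitepackages(),
    }


for key, value in user_site_status().items():
    print(f"{key}: {value}")
```

If user_site_enabled is reported as True, packages installed with pip install --user remain visible inside the environment.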

Test Python package

Now we will test our installed Python package by loading it in Python and checking its location to ensure we are using the correct version. For example, to test that NumPy is installed correctly, run

python -c "from __future__ import print_function; import numpy; print(numpy.__file__)"

and verify that the output generally matches

$HOME/.conda/envs/local/lib/python3.6/site-packages/numpy/__init__.py

To test installations of other packages, replace all instances of numpy with the name of the package you installed.

Remember, you will need to load the proper version of Python before you go to use your newly installed package. Packages are only installed to one version of Python.
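The same check can be written so that it also reports whether the package lives inside the currently active environment. This is a sketch under the assumption that the environment root is what Python reports as sys.prefix; package_origin and is_under are hypothetical helper names.

```python
import importlib.util
import os
import sys


def package_origin(name):
    """Return the file a top-level package would be imported from, or None if not found."""
    spec = importlib.util.find_spec(name)
    return spec.origin if spec else None


def is_under(path, prefix):
    """Return True if path lies inside the directory tree rooted at prefix."""
    path, prefix = os.path.realpath(path), os.path.realpath(prefix)
    return os.path.commonpath([path, prefix]) == prefix


origin = package_origin("numpy")
if origin is None:
    print("numpy is not installed for this interpreter")
else:
    print(origin, "inside active environment:", is_under(origin, sys.prefix))
```

Replace numpy with the name of the package you installed to check other packages.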

Install your own Python packages

If the method using conda above is not working, or if you prefer, you can consider installing Python packages from the source. Please read HOWTO: install your own Python packages.

But I use virtualenv and/or pip!

See the comparison to these package management tools here:

https://docs.conda.io/projects/conda/en/latest/commands.html#conda-vs-pip-vs-virtualenv-commands

Use pip only without conda package manager

pip installations are supported:

module load python
module list                             # check which python you just loaded
pip install --user --upgrade PACKAGE    # where PACKAGE is a valid package name

Note the default installation prefix is set to the system path where OSC users cannot install the package. With the option --user, the prefix is set to $HOME/.local where lib, bin, and other top-level folders for the installed packages are placed. Finally, the option --upgrade will upgrade the existing packages to the newest available version.

The one issue with this approach is portability with multiple Python modules. If you plan to stick with a single Python module, then this should not be an issue. However, if you commonly switch between different Python versions, then be aware of the potential trouble in using the same installation location for all Python versions.

Use pip in a Python virtual environment (Python 3 only)

Typically, you can install packages with the methods shown in Install packages section above, but in some cases where the conda package installations have no source from conda channels or have dependency issues, you may consider using pip in an isolated Python virtual environment.

To create an isolated virtual environment:

module reset
python3 -m venv --without-pip $HOME/venv/mytest --prompt "local"
source $HOME/venv/mytest/bin/activate
(local) curl https://bootstrap.pypa.io/get-pip.py | python    # get the newest version of pip
(local) deactivate

where we use the path $HOME/venv/mytest and the name local for the environment, but you may use any other path and name.

To activate and deactivate the virtual environment:

source $HOME/venv/mytest/bin/activate
(local) deactivate

To install packages:

source $HOME/venv/mytest/bin/activate
(local) pip install PACKAGE

You don't need the --user option within the virtual environment.
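The environment creation step can also be driven from Python through the standard venv module, which is what python3 -m venv uses internally. The sketch below creates the environment in a throwaway temporary directory instead of $HOME/venv/mytest so it can be tried without side effects; create_bare_venv is a hypothetical helper name.

```python
import os
import tempfile
import venv


def create_bare_venv(env_dir, prompt="local"):
    """Create a virtual environment without pip, like `python3 -m venv --without-pip`."""
    venv.create(env_dir, with_pip=False, prompt=prompt)
    # Every virtual environment has a pyvenv.cfg file at its root.
    return os.path.join(env_dir, "pyvenv.cfg")


# Demonstrate in a scratch directory that is removed afterwards.
with tempfile.TemporaryDirectory() as scratch:
    cfg = create_bare_venv(os.path.join(scratch, "mytest"))
    print("environment created:", os.path.exists(cfg))
```

As with the command-line version, pip still has to be installed into the new environment separately.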

Further Reading

Conda Test Drive: https://conda.io/docs/test-drive.html

HOWTO: Install Tensorflow locally

This documentation describes how to install the tensorflow package locally in your $HOME space. For more details on Tensorflow, see the software page.

Load python module

module load miniconda3/24.1.2-py310

If you need to install tensorflow versions not already provided or would like to use tensorflow in a conda environment proceed with the tutorial below.

Create Python Environment

First we will create a conda environment into which we will later install tensorflow. See HOWTO: Create and Manage Python Environments for details on how to create and set up your environment.

Make sure you activate your environment before proceeding:

source activate MY_ENV

Install package

Install the latest version of tensorflow.

conda install tensorflow

You can see all available versions for download on conda with conda search tensorflow.

There is also a GPU-compatible version called tensorflow-gpu.

If there are errors on this step you will need to resolve them before continuing.

Test python package

Now we will test tensorflow package by loading it in python and checking its location to ensure we are using the correct version.

python -c "import tensorflow;print (tensorflow.__file__)"

Output:

$HOME/.conda/envs/MY_ENV/lib/python3.9/site-packages/tensorflow/__init__.py

Remember, you will need to load the proper version of python before you go to use your newly installed package. Packages are only installed to one version of python.

Please refer to HOWTO: Use GPU with Tensorflow and PyTorch if you would like to use tensorflow with GPUs.

HOWTO: Install Python packages from source

While we provide a number of Python packages, you may need a package we do not provide. If it is a commonly used package or one that is particularly difficult to compile, you can contact OSC Help for assistance. We also have provided an example below showing how to build and install your own Python packages and make them available inside of Python. These instructions use "bash" shell syntax, which is our default shell. If you are using something else (csh, tcsh, etc), some of the syntax may be different.

Please consider using the conda package manager before you try to build Python packages using the method explained here. We have instructions on conda here.

Gather your materials

First, you need to collect what you need in order to perform the installation. We will do all of our work in $HOME/local/src. You should make this directory now.

mkdir -p $HOME/local/src

Next, we will need to download the source code for the package we want to install. In our example, we will use NumExpr. (NumExpr is already available through conda, so it is recommended you use conda to install it: tutorial here. The following steps are simply an example of the procedure you would follow to perform an installation of software unavailable in conda or pip). You can either download the file to your desktop and then upload it to OSC, or directly download it using the wget utility (if you know the URL for the file).

cd ~/local/src
wget https://github.com/pydata/numexpr/releases/download/v2.8.4/numexpr-2.8.4.tar.gz

Next, extract the downloaded file. In this case, since it's a "tar.gz" format, we can use tar to decompress and extract the contents.

tar xvfz numexpr-2.8.4.tar.gz

You can delete the downloaded archive now or keep it should you want to start the installation from scratch.

Build it!

Environment

To build the package, we will want to first create a temporary environment variable to aid in installation. We'll call it INSTALL_DIR.

export INSTALL_DIR=${HOME}/local/numexpr/2.8.4

We are roughly following the convention we use at the system level. This allows us to easily install new versions of software without risking breaking anything that uses older versions. We have specified a folder for the program (numexpr), and for the version (2.8.4). To be consistent with Python installations, we will create a second temporary environment variable that will contain the actual installation location.

export TREE=${INSTALL_DIR}/lib/python3.6/site-packages

Next, make the directory tree.

mkdir -p $TREE
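The python3.6 component of $TREE has to match the Python version you build with. As a rough sketch, the matching directory name can be derived from the running interpreter instead of being typed by hand. The helper site_packages_under is hypothetical and simply mirrors the lib/pythonX.Y/site-packages layout used in this tutorial; it does not query the installer for the real install location.

```python
import os
import sys


def site_packages_under(install_dir):
    """Build <install_dir>/lib/pythonX.Y/site-packages for the running interpreter."""
    version = f"python{sys.version_info.major}.{sys.version_info.minor}"
    return os.path.join(install_dir, "lib", version, "site-packages")


# Same install location as INSTALL_DIR in the text above.
install_dir = os.path.expanduser("~/local/numexpr/2.8.4")
print(site_packages_under(install_dir))
```

Running this with the Python module you plan to build against prints the value to use for TREE.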

Compile

To compile the package, we should switch to the GNU compilers. The system installation of Python was compiled with the GNU compilers, and this will help avoid any unnecessary complications. We will also load the Python package, if it hasn't already been loaded.

module swap intel gnu
module load python/3.6-conda5.2

Next, build it. This step may vary a bit, depending on the package you are compiling. You can execute python setup.py --help to see what options are available. Since we are overriding the install path to one that we can write to and that fits our management plan, we need to use the --prefix option.

NumExpr build also requires us to set the PYTHONPATH variable before building:

export PYTHONPATH=$PYTHONPATH:~/local/numexpr/2.8.4/lib/python3.6/site-packages

Find the setup.py file:

cd numexpr-2.8.4

Now to build:

python setup.py install --prefix=$INSTALL_DIR

Make it usable

At this point, the package is compiled and installed in ~/local/numexpr/2.8.4/lib/python3.6/site-packages. Occasionally, some files will be installed in ~/local/numexpr/2.8.4/bin as well. To ensure Python can locate these files, we need to modify our environment.

Manual

The most immediate way -- but the one that must be repeated every time you wish to use the package -- is to manually modify your environment. If files are installed in the "bin" directory, you'll need to add it to your path. As before, these examples are for bash, and may have to be modified for other shells. Also, you will have to modify the directories to match your install location.

export PATH=$PATH:~/local/numexpr/2.8.4/bin

And for the Python libraries:

export PYTHONPATH=$PYTHONPATH:~/local/numexpr/2.8.4/lib/python3.6/site-packages
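A mistyped directory in PYTHONPATH fails silently, so it can help to check the entries before debugging an import error. The following is a small sketch with hypothetical helper names; it only inspects the environment variable and does not change it.

```python
import os


def split_search_path(value):
    """Split a PATH-style string into its non-empty entries."""
    return [entry for entry in value.split(os.pathsep) if entry]


def missing_entries(value):
    """Return the entries of a PATH-style string that are not existing directories."""
    return [
        entry
        for entry in split_search_path(value)
        if not os.path.isdir(os.path.expanduser(entry))
    ]


missing = missing_entries(os.environ.get("PYTHONPATH", ""))
if missing:
    print("PYTHONPATH entries that do not exist:", missing)
else:
    print("all PYTHONPATH entries exist (or PYTHONPATH is empty)")
```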

Hardcode it

We don't recommend this option, as it is less flexible and can cause conflicts with system software. But if you want, you can modify your .bashrc (or similar file, depending on your shell) to set these environment variables automatically. Be extra careful; making a mistake in .bashrc (or similar) can destroy your login environment in a way that will require a system administrator to fix. To do this, you can copy the lines above modifying $PATH and $PYTHONPATH into .bashrc. Remember to test them interactively first. If you destroy your shell interactively, the fix is as simple as logging out and then logging back in. If you break your login environment, you'll have to get our help to fix it.

Make a module (recommended!)

This is the most complicated option, but it is also the most flexible, as you can have multiple versions of this particular software installed and specify at run-time which one to use. This is incredibly useful if a major feature changes that would break old code, for example. You can see our tutorial on writing modules here, but the important variables to modify are, again, $PATH and $PYTHONPATH. You should specify the complete path to your home directory here and not rely on any shortcuts like ~ or $HOME. Below is a modulefile written in Lua:

If you are following the tutorial on writing modules, you will want to place this file in $HOME/local/share/lmodfiles/numexpr/2.8.4.lua:

-- This is a Lua modulefile, this file 2.8.4.lua can be located anywhere

-- But if you are following a local modulefile location convention, we place them in

-- $HOME/local/share/lmodfiles/

-- For numexpr we place it in $HOME/local/share/lmodfiles/numexpr/2.8.4.lua

-- This finds your home directory

local homedir = os.getenv("HOME")

prepend_path("PYTHONPATH",
    pathJoin(homedir, "local/numexpr/2.8.4/lib/python3.6/site-packages"))

-- Add the package's executables to PATH

prepend_path("PATH", pathJoin(homedir, "local/numexpr/2.8.4/bin"))

Once your module is created (again, see the guide), you can use your Python package simply by loading the software module you created.

module use $HOME/local/share/lmodfiles/
module load numexpr/2.8.4

HOWTO: Use GPU with Tensorflow and PyTorch

GPU Usage on Tensorflow

Environment Setup

To begin, you need to first create a new conda environment or use an existing one. See HOWTO: Create Python Environment for more details. In this example we are using miniconda3/24.1.2-py310. You will need to make sure the Python version within your conda environment matches a version supported by tensorflow (supported versions are listed in the TensorFlow installation guide); in this example we will use Python 3.9.

Once you have a conda environment created and activated, install tensorflow into the environment (in this example we will be using version 2.17.0 of tensorflow):

pip install tensorflow==2.17.0

Verify GPU accessibility (Optional):

Now that we have the environment set up we can check if tensorflow can access the gpus.

To test the gpu access we will submit the following job onto a compute node with a gpu:

#!/bin/bash

#SBATCH --account <Project-Id>

#SBATCH --job-name Python_ExampleJob

#SBATCH --nodes=1

#SBATCH --time=00:10:00

#SBATCH --gpus-per-node=1

module load miniconda3/24.1.2-py310 cuda/12.3.0

source activate tensorflow_env

# run either of the following commands

python << EOF

import tensorflow as tf

print(tf.test.is_built_with_cuda())

EOF

python << EOF

from tensorflow.python.client import device_lib

print(device_lib.list_local_devices())

EOF

You will know tensorflow is able to successfully access the gpu if tf.test.is_built_with_cuda() returns True and device_lib.list_local_devices() returns an object with /device:GPU:0 as a listed device.

At this point tensorflow should be set up to utilize a GPU for its computations.
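As an alternative to the checks above, the public tf.config.list_physical_devices call lists the GPUs TensorFlow can see. The sketch below wraps it in a hypothetical helper, visible_gpus, that returns None when TensorFlow is not installed so the snippet can be run anywhere; on a login node without a GPU it is expected to report an empty list.

```python
def visible_gpus():
    """Return names of GPUs TensorFlow can see, or None if TensorFlow is not installed."""
    try:
        import tensorflow as tf
    except ImportError:
        return None
    return [device.name for device in tf.config.list_physical_devices("GPU")]


gpus = visible_gpus()
if gpus is None:
    print("tensorflow is not installed in this environment")
elif gpus:
    print("GPUs visible to tensorflow:", gpus)
else:
    print("tensorflow is installed but sees no GPU")
```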

GPU vs CPU

A GPU can provide significant performance improvements to many machine learning models. Here is an example Python script demonstrating the performance improvements. It was run in the same environment created in the section above.

from timeit import default_timer as timer

import tensorflow as tf
from tensorflow import keras
import numpy as np

(X_train, y_train), (X_test, y_test) = keras.datasets.cifar10.load_data()

# scaling image values between 0-1
X_train_scaled = X_train/255
X_test_scaled = X_test/255

# one hot encoding labels
y_train_encoded = keras.utils.to_categorical(y_train, num_classes = 10)
y_test_encoded = keras.utils.to_categorical(y_test, num_classes = 10)

# build and compile a small fully connected classifier
def get_model():
    model = keras.Sequential([
        keras.layers.Flatten(input_shape=(32,32,3)),
        keras.layers.Dense(3000, activation='relu'),
        keras.layers.Dense(1000, activation='relu'),
        keras.layers.Dense(10, activation='sigmoid')
    ])
    model.compile(optimizer='SGD',
                  loss='categorical_crossentropy',
                  metrics=['accuracy'])
    return model

# GPU: train one epoch with operations placed on the first GPU
with tf.device('/GPU:0'):
    start = timer()
    model_gpu = get_model()
    model_gpu.fit(X_train_scaled, y_train_encoded, epochs = 1)
    end = timer()

print("GPU time: ", end - start)

# CPU: train the same model for one epoch on the CPU
with tf.device('/CPU:0'):
    start = timer()
    model_cpu = get_model()
    model_cpu.fit(X_train_scaled, y_train_encoded, epochs = 1)
    end = timer()

print("CPU time: ", end - start)

Example code sampled from here

The above code was then submitted in a job with the following script:

#!/bin/bash

#SBATCH --account <Project-Id>

#SBATCH --job-name Python_ExampleJob

#SBATCH --nodes=1

#SBATCH --time=00:10:00

#SBATCH --gpus-per-node=1

module load miniconda3/24.1.2-py310 cuda/12.3.0

source activate tensorflow_env

python tensorflow_example.py

As we can see from the output, the GPU provided a significant performance improvement.

GPU time: 3.7491355929996644
CPU time: 78.8043485119997
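The size of the improvement can be expressed as a speedup factor, the CPU time divided by the GPU time. The short calculation below uses the two timings reported above; since they come from a single one-epoch run, the result is indicative rather than a benchmark.

```python
# Timings copied from the job output above (seconds for one training epoch).
gpu_seconds = 3.7491355929996644
cpu_seconds = 78.8043485119997

# Speedup is how many times faster the GPU run finished.
speedup = cpu_seconds / gpu_seconds
print(f"GPU speedup for one epoch: {speedup:.1f}x")
```

For this run the GPU was roughly 21 times faster than the CPU.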

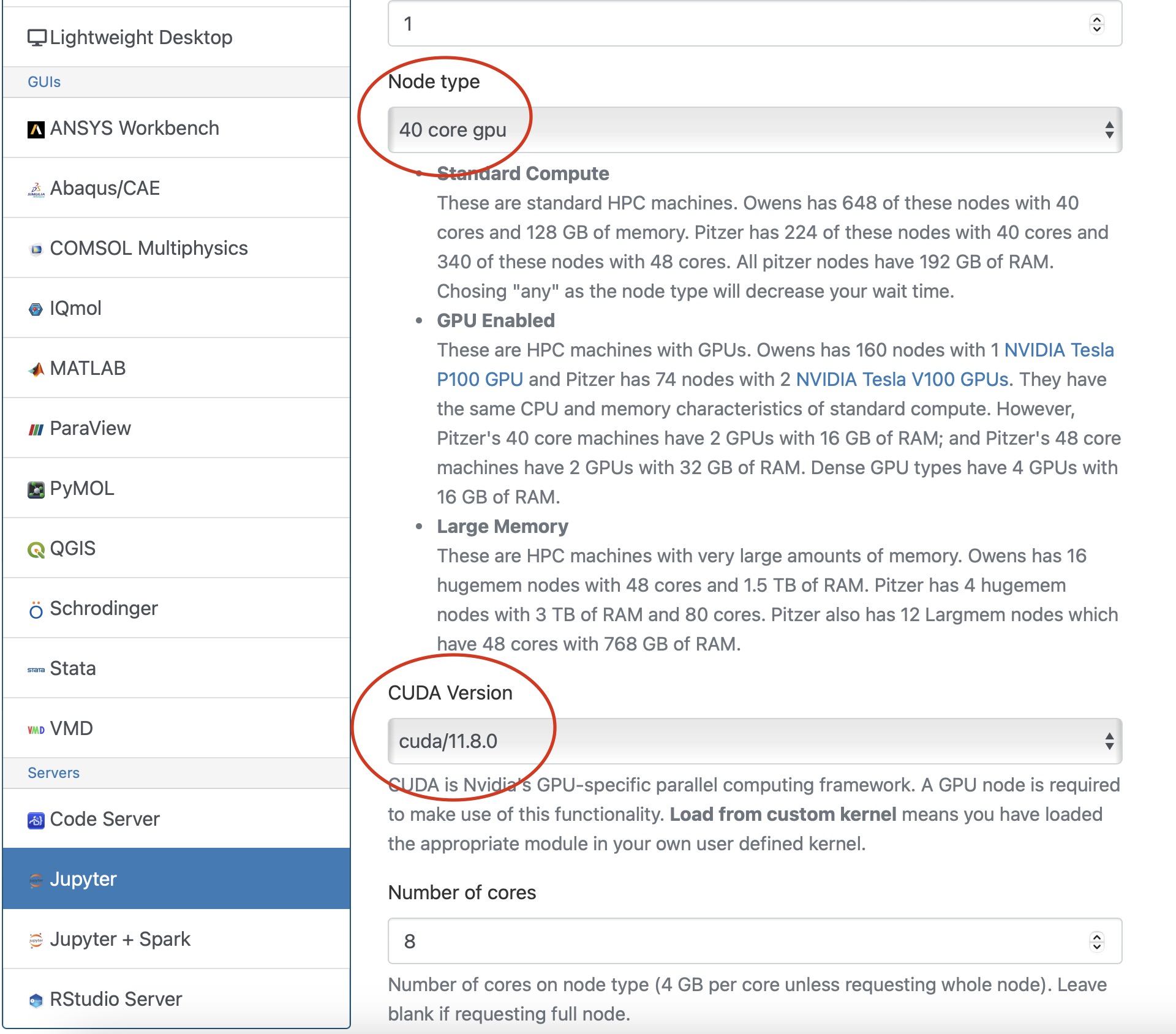

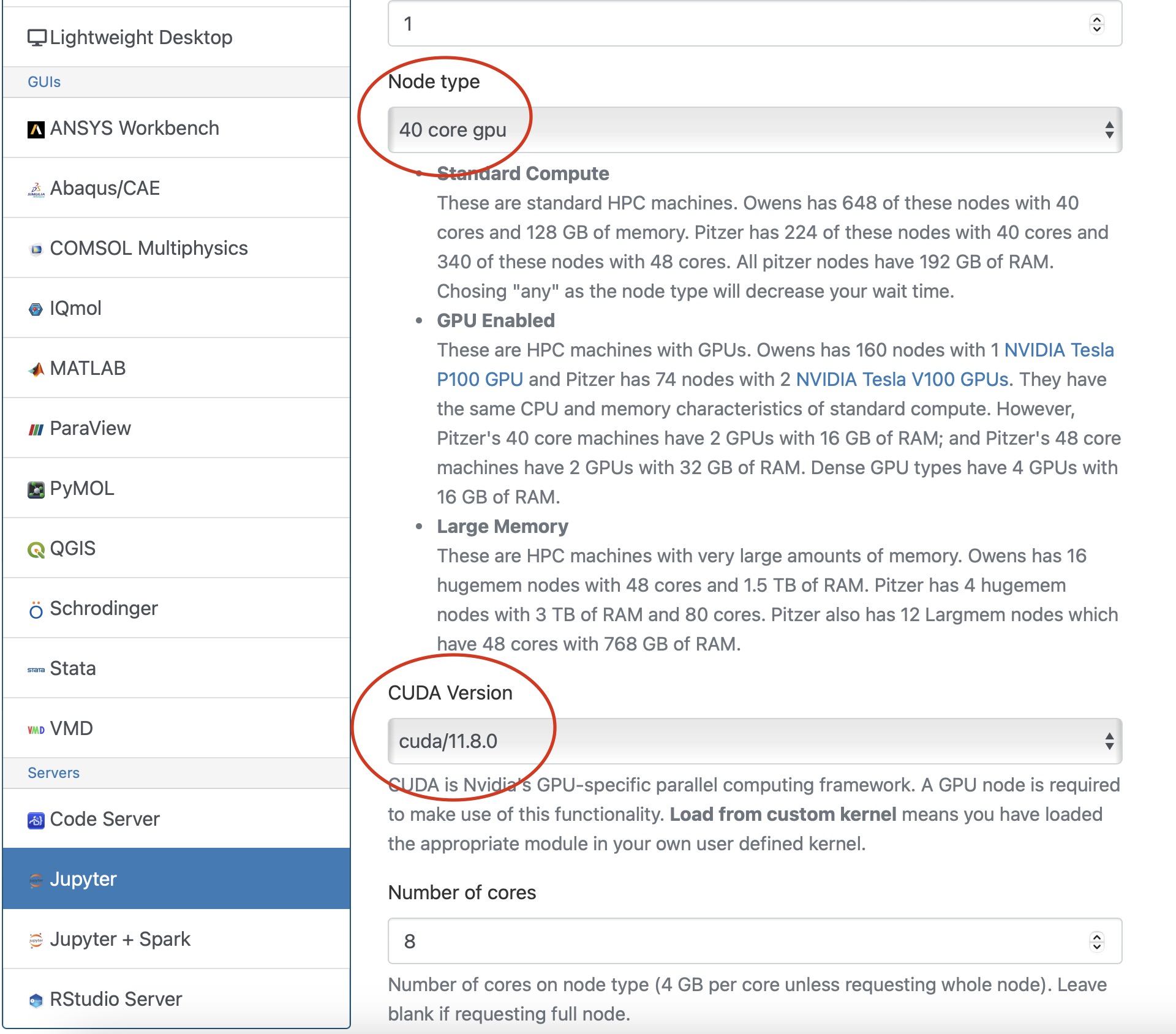

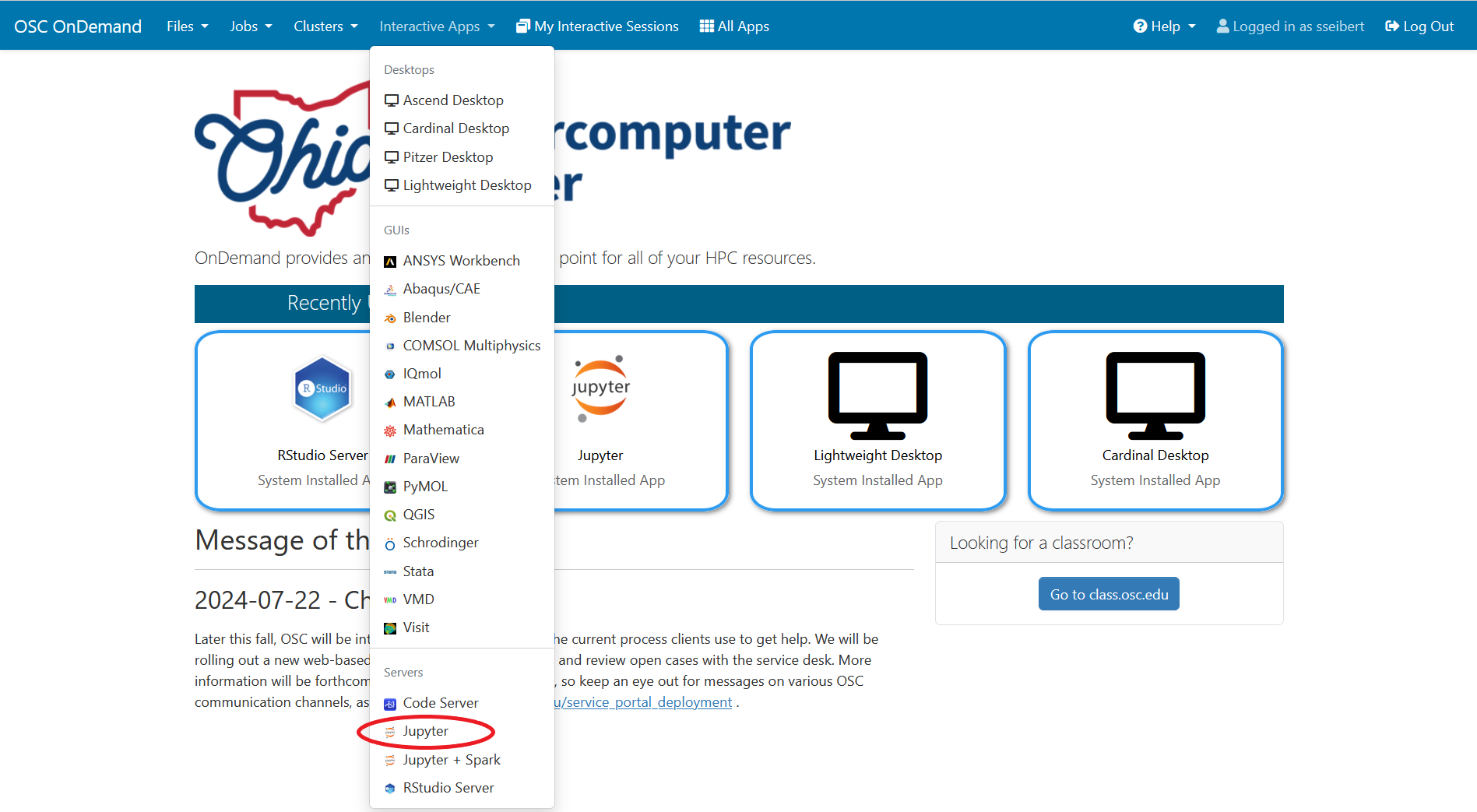

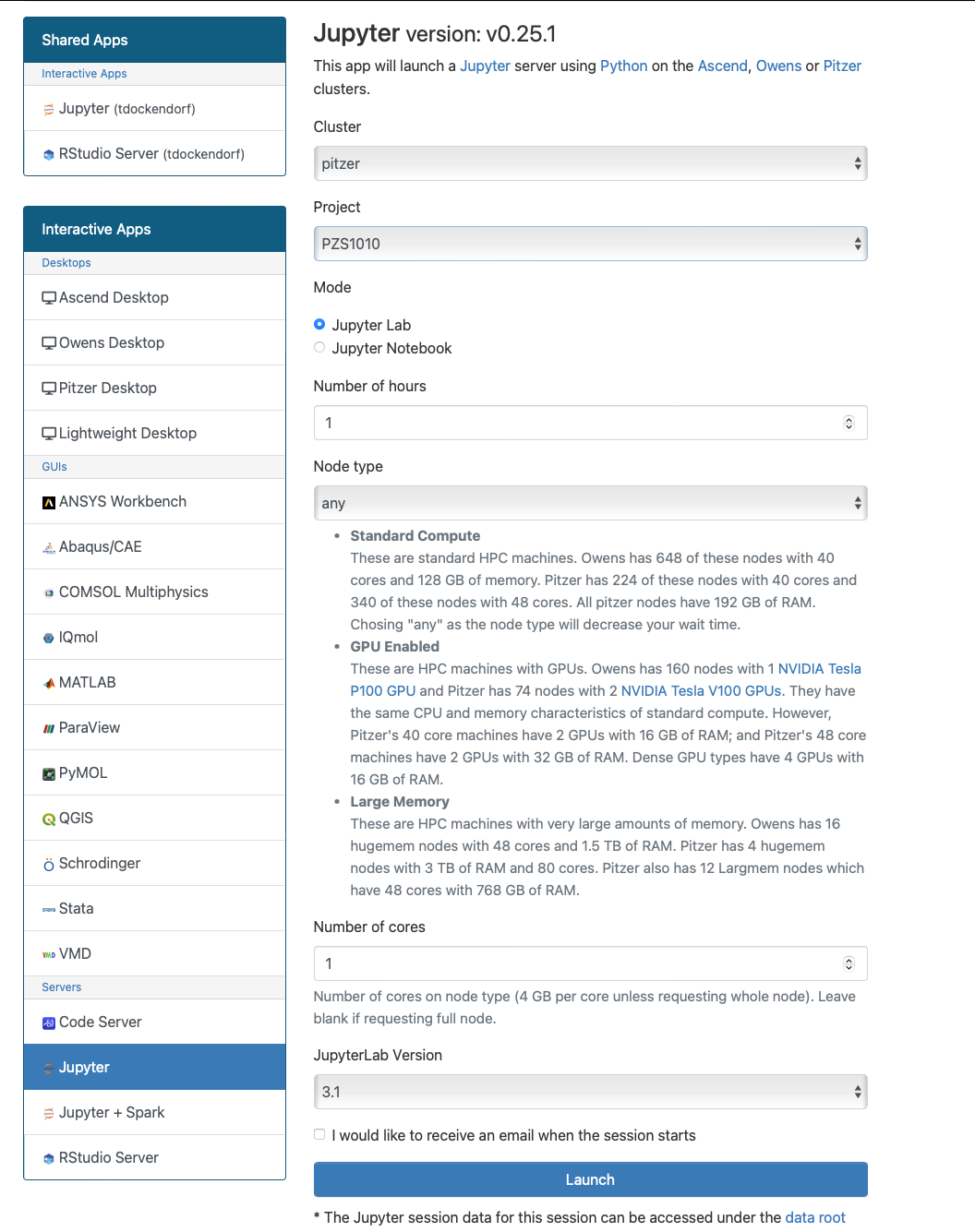

Usage on Jupyter

If you would like to use a GPU for your tensorflow project in a Jupyter notebook, follow the steps below to set up your environment.

To begin, you need to first create a new conda environment or use an existing one. See HOWTO: Create Python Environment for more details. In this example we are using python/3.6-conda5.2.

Once you have a conda environment created and activated, install tensorflow-gpu into the environment (in this example we will be using version 2.4.1 of tensorflow-gpu):

conda install tensorflow-gpu=2.4.1

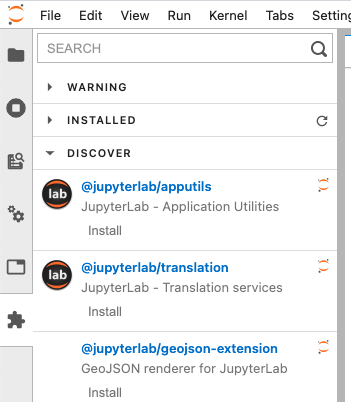

Now we will set up a Jupyter kernel. See HOWTO: Use a Conda/Virtual Environment With Jupyter for details on how to create a Jupyter kernel with your conda environment.

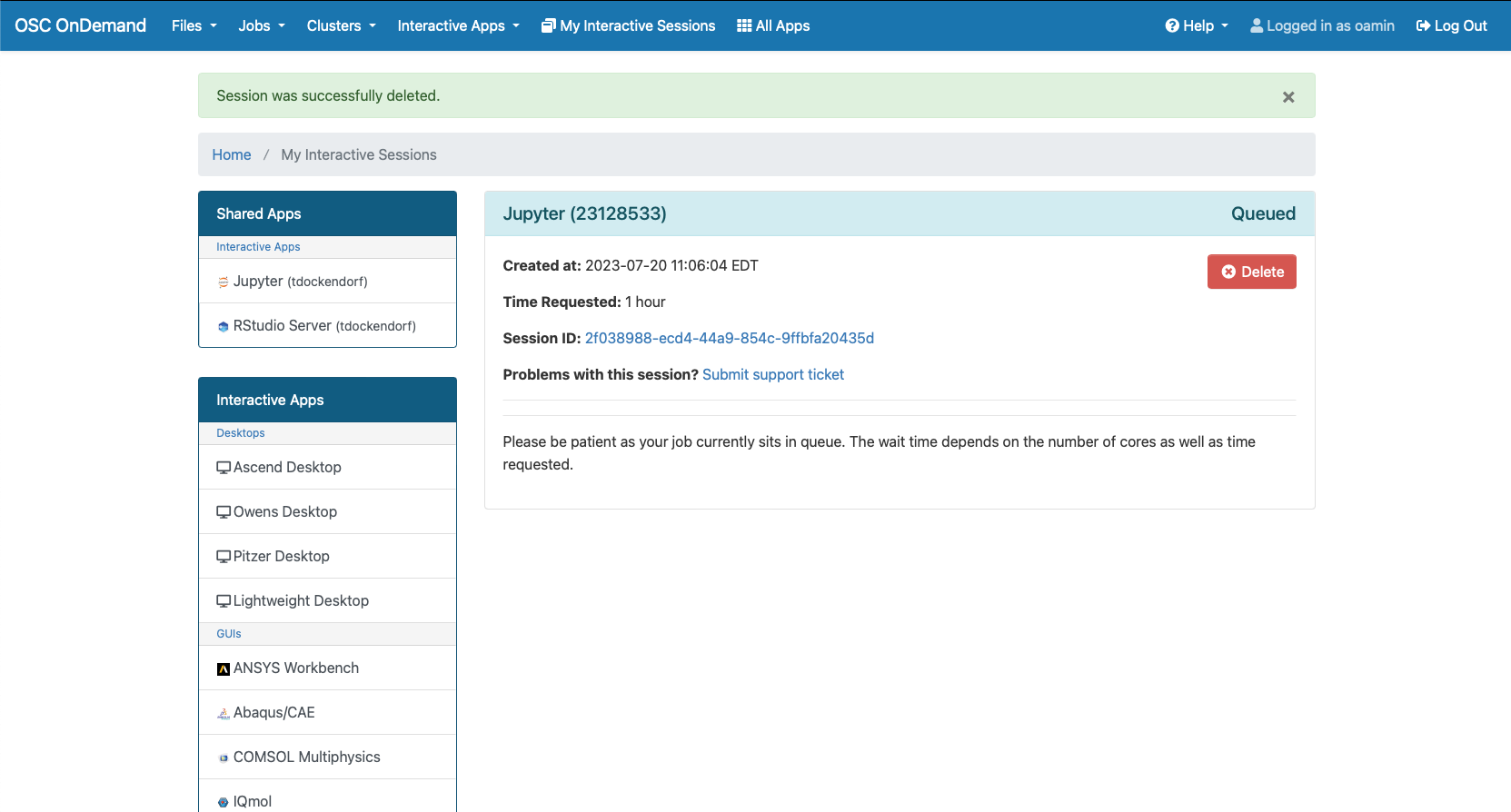

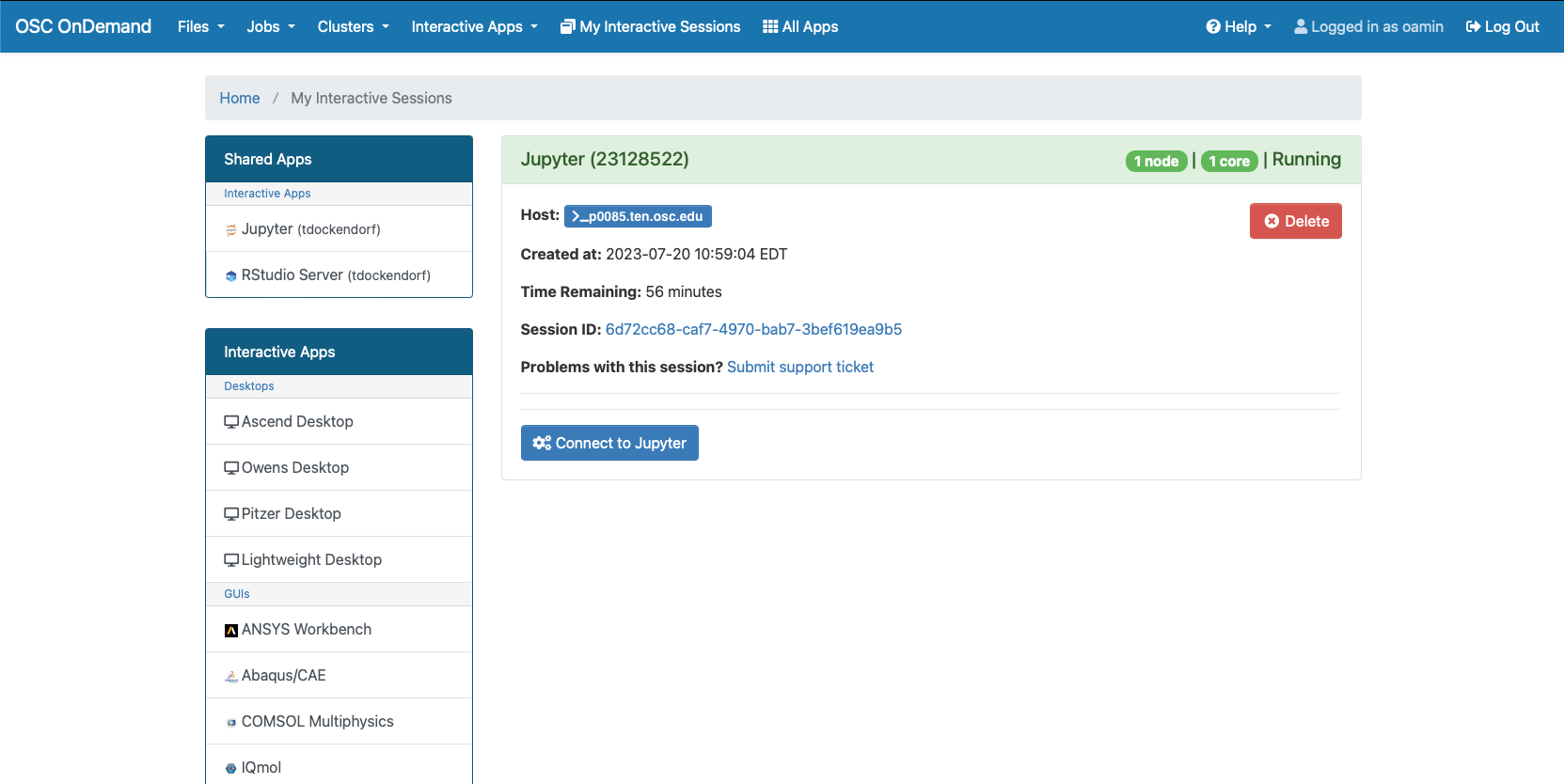

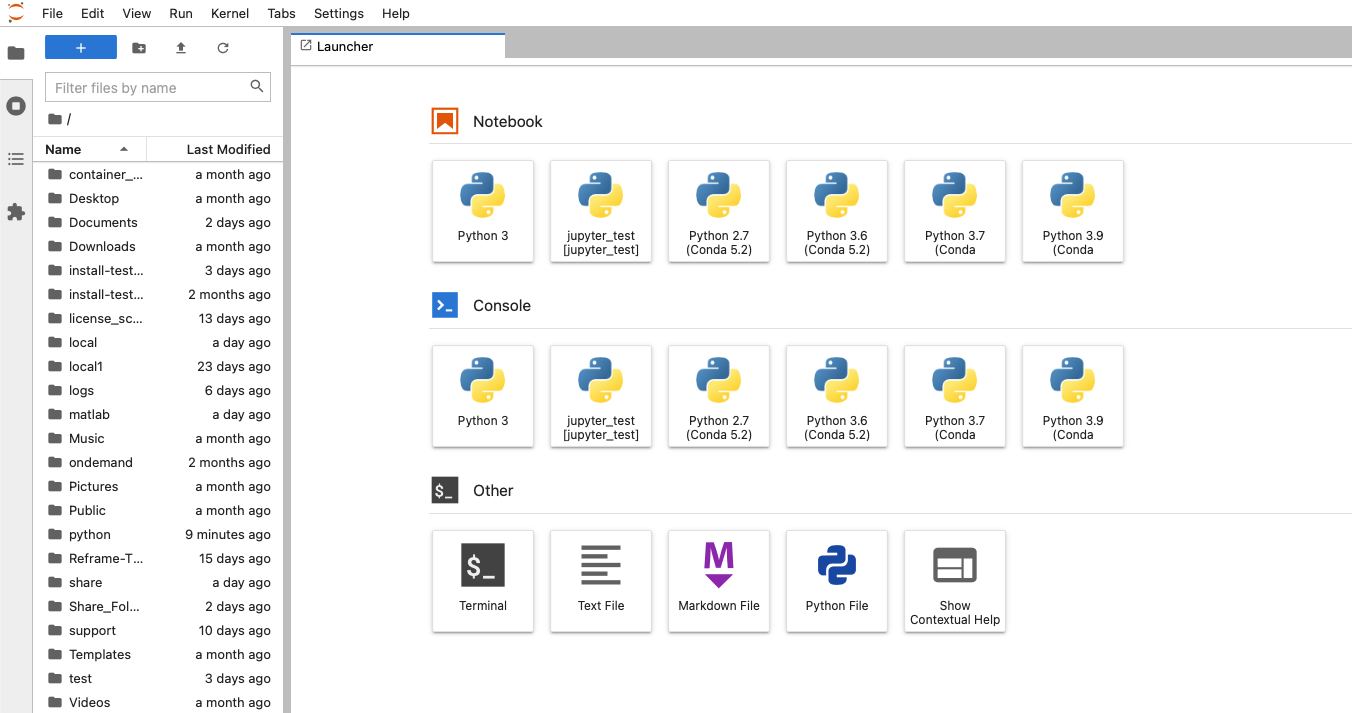

Once you have the kernel created, see the Usage section of the Python page for more details on accessing the Jupyter app from OnDemand.

Now you are all set up to use a GPU with TensorFlow in a Jupyter notebook.

GPU Usage on PyTorch

Environment Setup

To begin, you need to create a new conda environment or use an existing one. See HOWTO: Create Python Environment for more details. In this example we are using miniconda3/24.1.2-py310.

Once you have a conda environment created and activated, install pytorch into the environment (in this example we use version 2.3.0 of pytorch):

conda install pytorch=2.3.0

Verify GPU accessibility (Optional):

Now that we have the environment set up we can check if pytorch can access the gpus.

To test the gpu access we will submit the following job onto a compute node with a gpu:

#!/bin/bash

#SBATCH --account <Project-Id>

#SBATCH --job-name Python_ExampleJob

#SBATCH --nodes=1

#SBATCH --time=00:10:00

#SBATCH --gpus-per-node=1

module load miniconda3/24.1.2-py310 cuda/12.3.0

source activate pytorch_env

python << EOF

import torch

print(torch.cuda.is_available())

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print(device)

EOF

You will know PyTorch can access the GPU if torch.cuda.is_available() returns True and torch.device("cuda:0" if torch.cuda.is_available() else "cpu") returns cuda:0.

At this point PyTorch should be set up to utilize a GPU for its computations.

GPU vs CPU

Here is an example PyTorch script demonstrating the performance improvements from GPUs:

import torch

from timeit import default_timer as timer

# check for cuda availability

print("Cuda: ", torch.cuda.is_available())

device = torch.device("cuda:0" if torch.cuda.is_available() else "cpu")

print("Device: ", device)

#GPU

b = torch.ones(4000,4000).cuda() # Create matrix on GPU memory

start_time = timer()

for _ in range(1000):
    b += b

elapsed_time = timer() - start_time

print('GPU time = ',elapsed_time)

#CPU

a = torch.ones(4000,4000) # Create matrix on CPU memory

start_time = timer()

for _ in range(1000):
    a += a

elapsed_time = timer() - start_time

print('CPU time = ',elapsed_time)

The above code was then submitted in a job with the following script:

#!/bin/bash

#SBATCH --account <Project-Id>

#SBATCH --job-name Python_ExampleJob

#SBATCH --nodes=1

#SBATCH --time=00:10:00

#SBATCH --gpus-per-node=1

module load miniconda3/24.1.2-py310 cuda/12.3.0

source activate pytorch_env

python pytorch_example.py

As we can see from the output, the GPU provided a significant performance improvement.

GPU time = 0.0053490259997488465
CPU time = 4.232843188998231

Usage on Jupyter

If you would like to use a GPU for your PyTorch project in a Jupyter notebook, follow the steps below to set up your environment.

To begin, you need to create a new conda environment or use an existing one. See HOWTO: Create Python Environment for more details. In this example we are using miniconda3/24.1.2-py310.

Once you have a conda environment created and activated, install pytorch into the environment (in this example we use version 2.3.0 of pytorch):

conda install pytorch=2.3.0

You may also need to install numba for PyTorch to access a GPU from the Jupyter notebook.

conda install numba=0.60.0

Now we will set up a Jupyter kernel. See HOWTO: Use a Conda/Virtual Environment With Jupyter for details on how to create a Jupyter kernel with your conda environment.

Once you have the kernel created, see the Usage section of the Python page for more details on accessing the Jupyter app from OnDemand.

Now you are all set up to use a GPU with PyTorch in a Jupyter notebook.

Horovod

If you are using TensorFlow or PyTorch, you may also want to consider using Horovod. Horovod takes a single-GPU training script and scales it to train across many GPUs in parallel.

HOWTO: Debugging Tips

This article focuses on debugging strategies for C/C++ codes, but many are applicable to other languages as well.

Rubber Duck Debugging

This approach is a great starting point. Say you have written some code, and it does not do what you expect it to do. You have stared at it for a few minutes, but you cannot seem to spot the problem.

Try explaining what the problem is to a rubber duck. Then, walk the rubber duck through your code, line by line, telling it what it does. Don’t have a rubber duck? Any inanimate object will do (or even an animate one if you can grab a friend).

It sounds silly, but rubber duck debugging helps you to get out of your head, and hopefully look at your code from a new perspective. Saying what your code does (or is supposed to do) out loud has a good chance of revealing where your understanding might not be as good as you think it is.

Printf() Debugging

You’ve written a whole bunch of new code. It takes some inputs, chugs along for a while, and then creates some outputs. Somewhere along this process, something goes wrong. You know this because the output is not at all what you expected. Unfortunately, you have no idea where things are going wrong in the code.

This might be a good time to try out printf() debugging. It’s as simple as its name implies: simply add (more) printf() statements to your code. You’ve likely seen this being used. It’s the name given to the infamous ‘printf(“here”);’ calls used to verify that a particular codepath is indeed taken.

Consider printing out arguments and return values to key functions. Or, the results or summary statistics from large calculations. These values can be used as “sanity checks” to ensure that up until that point in the code, everything is going as expected.

Assertion calls, such as "assert(...)", can also be used for a similar purpose. However, the positive feedback you get from print statements is often helpful when you’re debugging. Seeing a valid result printed in standard out or a log file tells you positively that at least something is working correctly.
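As a rough illustration of combining print-style sanity checks with assertions, here is a small sketch. It is written in Python rather than C for brevity, and the function name column_means and its input format are invented for this example. The same pattern carries over to printf() and assert() in C/C++.

```python
def column_means(rows):
    """Return the mean of each column in a list of equal-length rows."""
    # Assertions fail fast when an assumption about the input is violated.
    assert rows, "expected at least one row"
    width = len(rows[0])
    assert all(len(row) == width for row in rows), "rows must have equal length"

    means = [sum(column) / len(rows) for column in zip(*rows)]

    # Print-style check: a short summary confirming this code path ran
    # and showing values that can be compared against expectations.
    print(f"column_means: {len(rows)} rows x {width} cols -> {means}")
    return means
```

Calling column_means([[1, 2], [3, 4]]) prints a one-line summary and returns the two column means, while an empty or ragged input stops immediately with a descriptive assertion message.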

Debuggers

Debuggers are tools that can be used to interactively (or with scripts) debug your code. A fairly common debugger for C and C++ codes is gdb. Many guides exist online for using gdb with your code.

OSC systems also provide the ARM DDT debugger. This debugger is designed for use with HPC codes and is arguably easier to use than gdb. It can be used to debug MPI programs as well.

Debuggers allow you to interact with the program while it is running. You can do things like read and write variable values, or check to see if/when certain functions are called.

Testing

Okay, this one isn’t exactly a debugging strategy. It’s a method to catch bugs early, and even prevent the addition of bugs. Writing a test suite for your code that’s easy to run (and ideally fast) lets you test new changes to ensure they don’t break existing functionality.

There are lots of different philosophies on testing software, too many to cover here. Here are two concepts that are worth looking into: unit testing and system testing.

The idea behind unit testing is writing tests for small “units” of code. These are often functions or classes. If you know that the small pieces that make up your code work, then you’ll have more confidence in the overall assembled program. There’s an added architecture benefit here too. Writing code that is testable in the first place often results in code that’s broken up into separate logical pieces (google “separation of concerns”). This makes your code more modular and less “spaghetti-like”. Your code will be easier to modify and understand.

The second concept – system testing – involves writing tests that run your entire program. These often take longer than unit tests, but have the added benefit that they’ll let you know whether or not your entire program still works after introducing a new change.

When writing tests (both system and unit tests), it’s often helpful to include a couple different inputs. Occasionally a program may work just fine for one input, but fail horribly with another input.
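To make the idea of testing several inputs concrete, here is a minimal unit-test sketch using Python's built-in unittest module. The function normalize is a made-up example, not part of any OSC software. The point is the table of cases, which includes edge cases (all zeros, empty input) alongside the ordinary ones.

```python
import unittest


def normalize(values):
    """Scale values so they sum to 1; an all-zero input is returned unchanged."""
    total = sum(values)
    if total == 0:
        return list(values)
    return [value / total for value in values]


class NormalizeTest(unittest.TestCase):
    def test_several_inputs(self):
        cases = [
            ([1, 1], [0.5, 0.5]),
            ([2, 6], [0.25, 0.75]),
            ([0, 0], [0, 0]),  # edge case: a naive version would divide by zero
            ([], []),          # edge case: empty input
        ]
        for values, expected in cases:
            # subTest reports each failing input separately.
            with self.subTest(values=values):
                self.assertEqual(normalize(values), expected)
```

If this is saved in a file, it can be run with python -m unittest followed by the file name.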

Minimal, Reproducible Example

Maybe your code takes a couple hours (or longer…) to run. There’s a bug in it, but every time you try to fix it, you have to wait a few hours to see if the fix worked. This is driving you crazy.

A possible approach to make your life easier is to try to make a Minimal, Reproducible Example (see this stackoverflow page for information).

Try to extract just the code that fails, from your program, and also its inputs. Wrap this up into a separate program. This allows you to run just the code that failed, hopefully greatly reducing the time it takes to test out fixes to the problem.

Once you have this example, can you make it smaller? Maybe take out some code that’s not needed to reproduce the bug, or shrink the input even further? Doing this might help you solve the problem.

Tools and other resources

- Compiler warnings – compilers are your friend. Chances are your compiler has a flag that can be used to enable more warnings than are on by default. GNU tools have “-Wall” and “-Wextra”. These can be used to instruct the compiler to tell you about places in the code where bugs may exist.

- The Practice of Programming by Brian Kernighan and Rob Pike contains a very good chapter on debugging C and C++ programs.

- Valgrind is a tool that can be used for many types of debugging, including looking for memory corruption and leaks. However, it slows down your code considerably, which may make it infeasible for HPC codes.

- ASAN (address sanitizer) is another tool that can be used for memory debugging. It is less featureful than Valgrind, but runs much quicker, and so will likely work with your HPC code.

HOWTO: Establish durable SSH connections

In December 2021 OSC updated its firewall to enhance security. As a result, SSH sessions are being closed more quickly than they used to be. It is very easy to modify your SSH options in the client you use to connect to OSC to keep your connection open.

In ~/.ssh/config (use the command touch ~/.ssh/config to create it if there is no existing one), you can set 3 options:

TCPKeepAlive=no
ServerAliveInterval=60
ServerAliveCountMax=5

Please refer to your SSH client documentation for how to set these options in your client.

HOWTO: Estimating and Profiling GPU Memory Usage for Generative AI

Overview

Estimating GPU memory (VRAM) usage for training or running inference with large deep learning models is critical to both (1) requesting the appropriate resources for running your computation and (2) optimizing your job once it is set up. Out-of-memory (OOM) errors can be avoided by requesting appropriate resources and by better understanding memory usage during the job using the memory profiling tools described here.

Estimating GPU Memory Usage for Inference

Estimated GPU VRAM in GB = 2x model parameters (in billions) + 1x context length (in thousands)

For example, for StableCode with 3 billion parameters and 16k context length, we estimate 6GB for model weights + 16GB for overhead, totaling 22 GB estimated to run inference. A model like this should fit on an A100 or H100 for inference.

This estimate assumes fp16 (half-precision). Quantization to lower precisions (8-bit, 4-bit, etc) will reduce memory requirements.
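The inference rule of thumb above can be written as a one-line helper. This is only a sketch of the stated formula (fp16 weights, roughly 1 GB per thousand tokens of context). The function name is made up for this example.

```python
def estimate_inference_vram_gb(params_billions, context_length_thousands):
    """Rule-of-thumb fp16 inference estimate: 2 GB per billion parameters
    plus 1 GB per thousand tokens of context length."""
    weights_gb = 2 * params_billions
    context_gb = 1 * context_length_thousands
    return weights_gb + context_gb


# StableCode: 3 billion parameters, 16k context length
print(estimate_inference_vram_gb(3, 16))  # 6 GB weights + 16 GB context = 22
```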

Estimating GPU Memory Usage for Training

Estimated GPU VRAM in GB = 40x model parameters (in billions)

For example, for LLaMA-3 with 7 billion parameters, we estimate minimum 280GB to train it. This exceeds the VRAM of even a single H100 accelerator, requiring distributed training. See HOWTO: PyTorch Fully Sharded Data Parallel (FSDP) for more details.

Of note, the training estimate assumes transformer-based architecture with Adam optimizer using mixed-precision (32bit and 16bit weights used) and is extrapolated from results here: Microsoft Deepspeed.

Activation checkpointing can reduce the memory demands, at the cost of increasing runtime.
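A similar sketch for the training rule of thumb, together with a naive lower bound on the number of GPUs. The function names are illustrative, and the 94 GB figure is the H100 memory size listed in the table in this section. The GPU count ignores per-GPU overhead and communication buffers, so measured requirements can be higher.

```python
import math


def estimate_training_vram_gb(params_billions):
    """Rule-of-thumb training estimate: 40 GB per billion parameters
    (transformer, Adam optimizer, mixed precision)."""
    return 40 * params_billions


def min_gpus(required_gb, gpu_vram_gb):
    """Naive lower bound on GPU count: total memory divided by per-GPU memory."""
    return math.ceil(required_gb / gpu_vram_gb)


required = estimate_training_vram_gb(7)  # 7 billion parameters -> 280 GB
print(required, min_gpus(required, 94))  # at least 3 GPUs with 94 GB each
```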

Example GPU Memory Usage for Selected Models

| Model Name | Parameter count (billions) | Training / Inference | Batch Size | Context Length | Min GPUs required | GPU Memory Usage (GB) |
|---|---|---|---|---|---|---|
| minGPT (GPT-2) | 0.12 | training | 216 | | 1 V100 (16GB) | 9 |
| T5 (small) | 3 | training | 4 | | 1 H100 (94GB) | 81 |
| T5 (medium) | 11 | training | 4 | | 8 H100s (94GB) | 760 |
| Stable-Code-3b | 3 | inference (vllm) | 256 | 16k | 1 A100 (40GB) | Model weights: 6GB. Total usage: 7 (eager mode with 1k context length, 0.18 gpu), 10 (eager mode with 10k context length, 0.25 gpu), 14 (using 0.37 gpu), 20 (using 0.5 gpu), 36 (using 0.9 default gpu) |
| Falcon-7b-Instruct | 7 | inference (vllm) | 256 | 2k | 1 A100 (40GB) | Model weights: 13GB. Total usage: 15 (using 0.37 gpu), 20 (using 0.5 gpu), 36 (using 0.9 default) |
| CodeLlama-13b-Instruct-hf | 13 | inference (vllm) | 256 | 10k | 1 A100 (40GB) | Model weights: 25GB |
| Gemma3:12b | 12 | inference (Ollama) | 512 | 2k | 1 V100 (16GB) | 11 |

Training memory usage was obtained from Prometheus data. Inference usage was measured with nvidia-smi and vllm or Ollama. Training usage generally follows the estimates above. Inference usage varies considerably depending on parameter size, context length and dtype precision. Vllm also uses a pre-allocation strategy which can increase estimated usage if left at its default 0.9. Eager mode vs CUDA graph mode trades memory footprint for speed.

Profiling GPU Memory Usage During Computation

There are a number of tools that can be used to gather more information about your job's GPU memory usage. Detailed memory usage can be helpful in debugging and optimizing your application to reduce memory footprint and increase performance.

GPU Usage script

The get_gpu_usage script is available on all OSC clusters. Start with this usage script to determine the maximum memory requirements of your job. Once your job has completed, provide the SLURM job ID (and optionally cluster name) to get the maximum memory usage on each GPU used on your job. For example,

$ get_gpu_usage -M cardinal 477503
Host c0813 GPU #0: 19834 MB
Host c0813 GPU #1: 33392 MB
Host c0813 GPU #2: 28260 MB
Host c0813 GPU #3: 28244 MB
Host c0823 GPU #0: 19808 MB
Host c0823 GPU #1: 33340 MB
Host c0823 GPU #2: 28260 MB
Host c0823 GPU #3: 28244 MB
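If you want to post-process this report, for example to find the single highest per-GPU peak when sizing a future job, a small parser is enough. The sketch below assumes the "Host <name> GPU #<n>: <value> MB" format shown in the example output. The function name is made up, and the format of get_gpu_usage output may change.

```python
import re
from collections import defaultdict

# Matches entries such as "Host c0813 GPU #1: 33392 MB"
USAGE_ENTRY = re.compile(r"Host (\S+) GPU #(\d+): (\d+) MB")


def peak_gpu_memory(report):
    """Return ({host: {gpu_index: MB}}, highest MB seen on any GPU)."""
    per_host = defaultdict(dict)
    for host, gpu, megabytes in USAGE_ENTRY.findall(report):
        per_host[host][int(gpu)] = int(megabytes)
    peak = max(
        (mb for gpus in per_host.values() for mb in gpus.values()),
        default=0,
    )
    return dict(per_host), peak
```

The report text could be captured with, for instance, get_gpu_usage -M cardinal 477503 > usage.txt and then read from that file.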

Nvidia-smi Usage

nvidia-smi is a command-line tool available on all GPU-enabled compute nodes that lists processes and their GPU memory usage. Without any arguments, the output looks like the following:

[username@p0254 ~]$ nvidia-smi
Wed Nov 13 20:58:25 2024
+-----------------------------------------------------------------------------------------+
| NVIDIA-SMI 550.90.12              Driver Version: 550.90.12      CUDA Version: 12.4     |
|-----------------------------------------+------------------------+----------------------+
| GPU  Name                 Persistence-M | Bus-Id          Disp.A | Volatile Uncorr. ECC |
| Fan  Temp   Perf          Pwr:Usage/Cap |           Memory-Usage | GPU-Util  Compute M. |
|                                         |                        |               MIG M. |
|=========================================+========================+======================|
|   0  Tesla V100-PCIE-16GB           On  |   00000000:3B:00.0 Off |                  Off |
| N/A   27C    P0             37W /  250W |   13830MiB /  16384MiB |      0%      Default |
|                                         |                        |                  N/A |
+-----------------------------------------+------------------------+----------------------+

+-----------------------------------------------------------------------------------------+
| Processes:                                                                              |
|  GPU   GI   CI        PID   Type   Process name                              GPU Memory |
|        ID   ID                                                               Usage      |
|=========================================================================================|
|    0   N/A  N/A     27515      C   .../vllm_env/bin/python                     13818MiB |
+-----------------------------------------------------------------------------------------+

The example output above shows a V100 on a Pitzer compute node running a vllm inference server running a 3 billion parameter model and using about 14GB of GPU memory.

Summary statistics are available at the top, showing the GPUs available and their current and max memory available. Below, all running processes are shown, with the relevant GPU, PID, Process name, and GPU Memory Usage for that process.

The tool will show multiple GPU devices on the same node if more than one is available, but is limited to one node.

Additional arguments are available, as described in the official documentation.
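For scripted monitoring, nvidia-smi can also emit machine-readable output through its --query-gpu and --format options. The sketch below is one possible way to read used and total memory per GPU from Python. The function names are made up for this example, and query_gpu_memory only works on a node where nvidia-smi is installed.

```python
import subprocess

# Ask nvidia-smi for CSV output: GPU index, used MiB, total MiB
QUERY = [
    "nvidia-smi",
    "--query-gpu=index,memory.used,memory.total",
    "--format=csv,noheader,nounits",
]


def parse_gpu_memory(csv_text):
    """Turn lines such as '0, 13830, 16384' into {0: (13830, 16384)}."""
    usage = {}
    for line in csv_text.strip().splitlines():
        index, used, total = (field.strip() for field in line.split(","))
        usage[int(index)] = (int(used), int(total))
    return usage


def query_gpu_memory():
    """Run nvidia-smi on the current node and parse its CSV output."""
    result = subprocess.run(QUERY, check=True, capture_output=True, text=True)
    return parse_gpu_memory(result.stdout)
```

Keeping the parsing separate from the subprocess call makes it possible to test the parser on a machine without a GPU.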

To run nvidia-smi on the correct node, you will need to ssh to the node where your job is running. You can find the node hostname using the squeue command:

[username@pitzer-login02 ~]$ squeue -u username

JOBID PARTITION NAME USER ST TIME NODES NODELIST(REASON)

32521417 gpudebug- interact username R 0:38 1 p0254

where "username" is your username. In the example above, "p0254" is the compute node you need to run the tool on. The jobid is also useful for other monitoring tools. See HOWTO: Monitoring and Managing Your Job for more details.

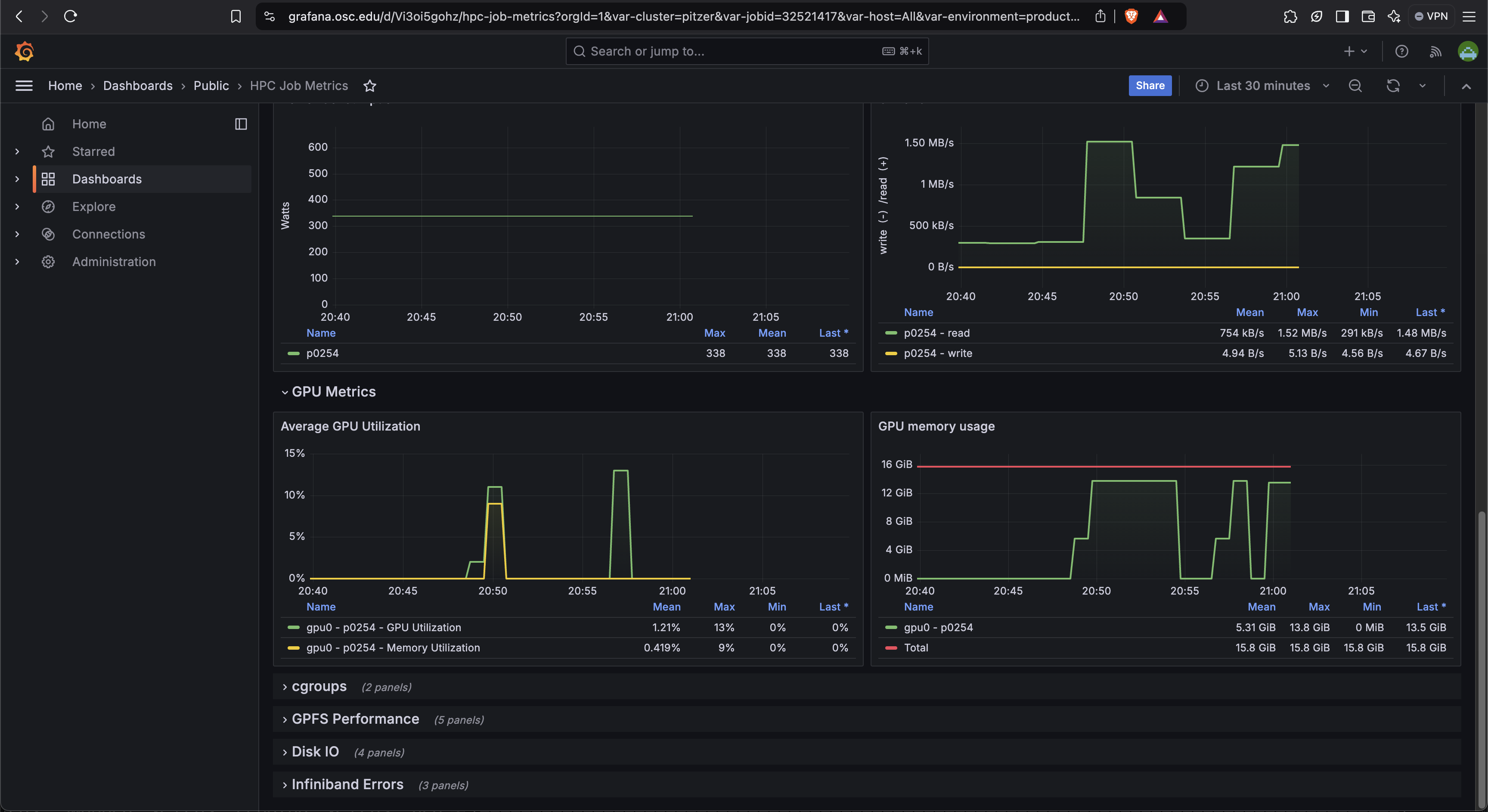

Grafana Dashboard Metrics

Grafana provides a dashboard that shows a timeline of GPU memory and usage over time during your job. The script job-dashboard-link.py

This can give you an idea of when in your job the memory usage peaked and how long it stayed there.

PyTorch memory snapshotting

This tool requires the following minor modifications to your code

- Start: torch.cuda.memory._record_memory_history(max_entries=100000)
- Save: torch.cuda.memory._dump_snapshot(file_name)
- Stop: torch.cuda.memory._record_memory_history(enabled=None)

This creates a trace file that can be viewed using the JavaScript code available here. The trace contains information about which code triggered memory allocations and deallocations. This information is sufficient in most cases to understand the memory behavior of your application. The following two tools can be used to provide additional information, but are only recommended for advanced users.

See documentation here for more information on how to snapshot GPU memory usage while running PyTorch code.
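One possible way to keep the start, save, and stop calls together is a small context manager, sketched below. The helper name record_cuda_memory and its cuda_memory parameter are inventions for this example. By default it uses torch.cuda.memory, and the parameter exists only so the helper can be exercised without a GPU. The underscore-prefixed PyTorch functions are private and may change between releases.

```python
from contextlib import contextmanager


@contextmanager
def record_cuda_memory(file_name, max_entries=100000, cuda_memory=None):
    """Record CUDA memory history for the enclosed block and dump a snapshot."""
    if cuda_memory is None:
        import torch  # imported lazily; requires a PyTorch installation
        cuda_memory = torch.cuda.memory

    cuda_memory._record_memory_history(max_entries=max_entries)  # Start
    try:
        yield
    finally:
        # Save and stop even if the enclosed code raised (for example on OOM).
        cuda_memory._dump_snapshot(file_name)
        cuda_memory._record_memory_history(enabled=None)
```

A training step would then be wrapped as "with record_cuda_memory('snapshot.pickle'):" followed by the code to profile. Dumping in the finally clause means a snapshot is still written when the block fails partway through.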

PyTorch Profiler

"PyTorch Profiler is a tool that allows the collection of performance metrics during training and inference. Profiler’s context manager API can be used to better understand what model operators are the most expensive, examine their input shapes and stack traces, study device kernel activity and visualize the execution trace."

The PyTorch profiler also requires code modifications. It provides a suite of configuration options for what information to track and how to export it. The overhead (both in terms of slowing down your job and the size of the profile files) can get very large. There are multiple ways to view the profile data (tensorboard, HTA, chrome browser, etc). At time of writing (2/18/25), tensorboard support has been officially deprecated while HTA is still experimental.

See PyTorch Profiler documentation here.

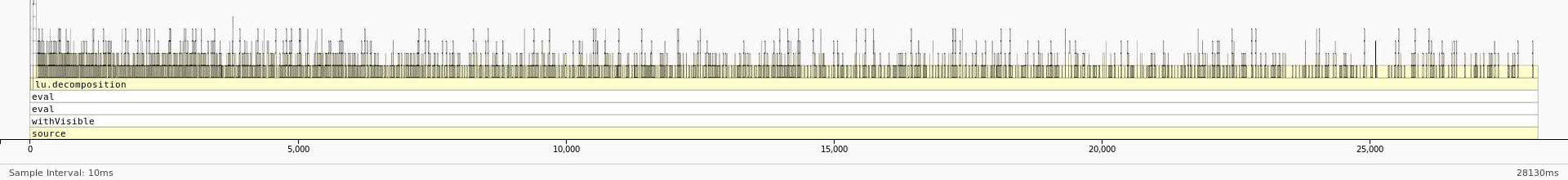

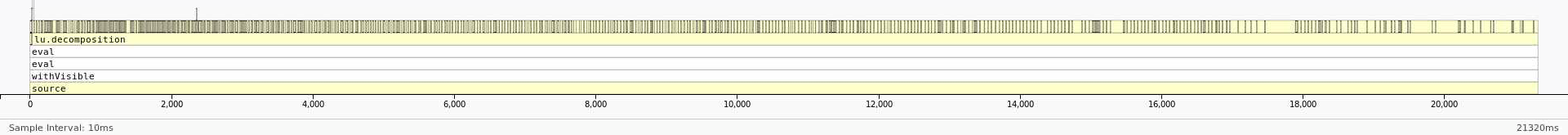

Here is an example walkthrough using both tools.

NVIDIA Nsight Systems

This profiler provides detailed hardware-level information about what the GPU did during your job. It can be challenging to map hardware events to user-level functions when using Nsight, particularly for Python-based codes. This is only recommended for advanced users. Documentation from NVIDIA on how to use Nsight Systems is available here.

Solving GPU Out-of-Memory Errors

While there is no one-size-fits-all solution to solving OOM errors, here are a few guidelines on how to reduce GPU memory usage. If you require assistance, please contact OSC Support.

HOWTO: Identify users on a project account and check status

An eligible principal investigator (PI) heads a project account and can authorize/remove user accounts under the project account (please check our Allocations and Accounts documentation for more details). This document shows you how to identify users on a project account and check the status of each user.

Identify Users on a Project Account

If you know the project account

If the project account (projectID) is known, the OSCgetent command will list all users on the project:

$ OSCgetent group projectID

The returned information is in the format of:

projectID:*:gid: list of user IDs

gid is the group identifier number unique for the project account projectID.

For example, the command OSCgetent group PZS0712 lists all users on the project account PZS0712 as below:

$ OSCgetent group PZS0712
PZS0712:*:5513:amarcum,guilfoos,hhamblin,kcahill,xwang

Multiple groups can also be queried at once.

For example, the command OSCgetent group PZS0712 PZS0726 lists all users on both PZS0712 and PZS0726:

PZS0712:*:5513:amarcum,guilfoos,hhamblin,kcahill,xwang
PZS0726:*:6129:amarcum,kkappel
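Because each entry follows the projectID:*:gid:user-list format described above, the output is easy to process in a script. The sketch below parses one entry and then finds users common to two projects. The function name is made up for this example, and the sample strings are copied from the output above.

```python
def parse_group_entry(line):
    """Split 'projectID:*:gid:user1,user2' into its parts."""
    project, _password, gid, members = line.strip().split(":", 3)
    users = [user.strip() for user in members.split(",") if user.strip()]
    return {"project": project, "gid": int(gid), "users": users}


first = parse_group_entry("PZS0712:*:5513:amarcum,guilfoos,hhamblin,kcahill,xwang")
second = parse_group_entry("PZS0726:*:6129:amarcum,kkappel")

# Users who belong to both projects
shared = sorted(set(first["users"]) & set(second["users"]))
print(shared)
```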

Details on a project can also be obtained along with the user list using the OSCfinger command.

$ OSCfinger -g projectID

This returns:

Group: projectID
GID: XXXX
Status: 'active/restricted/etc'
Type: XX
Principal Investigator: 'PI email'
Admins: NA
Members: 'list of users'
Category: NA
Institution: 'affiliated institution'
Description: 'short description'
---

If you don't know the project account, but know the username

If the project account is not known, but the username is known, use the OSCfinger command to list all of the groups the user belongs to:

OSCfinger username

The returned information is in the format of:

Login: username
Name: First Last
Directory: home directory path
Shell: /bin/bash
E-mail: user's email address
Primary Group: user's primary project
Groups: list of projects and other groups user is in
Password Changed: date password was last changed
Password Expires: date password expires
Login Disabled: TRUE/FALSE
Password Expired: TRUE/FALSE
Current Logins: Displays if user is currently logged in and from where/when

For example, with the username as amarcum, the command OSCfinger amarcum returns the information as below:

$ OSCfinger amarcum
Login: amarcum
Name: Antonio Marcum
Directory: /users/PZS0712/amarcum
Shell: /bin/bash
E-mail: amarcum@osc.edu
Primary Group: PZS0712
Groups: sts,ruby,l2supprt,oscall,clntstf,oscstaff,clntall,PZS0712,PZS0726
Password Changed: May 12 2019 15:47 (calculated)
Password Expires: Aug 11 2019 12:05 AM
Login Disabled: FALSE
Password Expired: FALSE
Current Logins: On since Mar 07 2019 12:12 on pts/14 from pitzer-login01.hpc.osc.edu
----

If you don't know either the project account or user account

If neither the project account nor the username is known, use the OSCfinger command with the '-e' flag to look up the user account by the user's name.

Use the following command to list all of the user accounts associated with a First and Last name:

$ OSCfinger -e 'First Last'

For example, with the user's first name as Summer and last name as Wang, the command OSCfinger -e 'Summer Wang' returns the information as below:

$ OSCfinger -e 'Summer Wang'
Login: xwang
Name: Summer Wang
Directory: /users/oscgen/xwang
Shell: /bin/bash
E-mail: xwang@osc.edu
Primary Group: PZS0712
Groups: amber,abaqus,GaussC,comsol,foampro,sts,awsmdev,awesim,ruby,matlab,aasheats,mars,ansysflu,wrigley,lgfuel,l2supprt,fsl,oscall,clntstf,oscstaff,singadm,clntall,dhgremot,fsurfer,PZS0530,PCON0003,PZS0680,PMIU0149,PZS0712,PAS1448
Password Changed: Jan 08 2019 11:41
Password Expires: Jul 08 2019 12:05 AM
Login Disabled: FALSE
Password Expired: FALSE
---

Once you know the username, follow the steps in the previous sections to identify the user's project and get all user accounts on that project. Please contact OSC Help if you have any questions.

Check the Status of a User

Use the OSCfinger command to check the status of a user account as below:

OSCfinger username

For example, if the username is xwang, the command OSCfinger xwang will return:

$ OSCfinger xwang
Login: xwang
Name: Summer Wang
Directory: /users/oscgen/xwang
Shell: /bin/bash
E-mail: xwang@osc.edu
Primary Group: PZS0712
Groups: amber,abaqus,GaussC,comsol,foampro,sts,awsmdev,awesim,ruby,matlab,aasheats,mars,ansysflu,wrigley,lgfuel,l2supprt,fsl,oscall,clntstf,oscstaff,singadm,clntall,dhgremot,fsurfer,PZS0530,PCON0003,PZS0680,PMIU0149,PZS0712,PAS1448
Password Changed: Jan 08 2019 11:41
Password Expires: Jul 08 2019 12:05 AM
Login Disabled: FALSE
Password Expired: FALSE
---

- The home directory of xwang is /users/oscgen/xwang (Directory: /users/oscgen/xwang).
- The shell of xwang is bash (Shell: /bin/bash). If the information is Shell: /access/denied, it means this user account has been either archived or restricted. Please contact OSC Help if you'd like to reactivate this user account.
- xwang@osc.edu is the email associated with the user account xwang; that is, all OSC emails related to the account xwang will be sent to xwang@osc.edu (E-mail: xwang@osc.edu). Please contact OSC Help if the email address associated with this user account has changed, so that important notifications, messages, and reminders from OSC are received in a timely manner.

Check the Usage and Quota of a User's Home Directory/Project Space

All users see their file system usage statistics when logging in, like so:

As of 2018-01-25T04:02:23.749853 userid userID on /users/projectID used XGB of quota 500GB and Y files of quota 1000000 files

The information is from the file /users/reporting/storage/quota/*_quota.txt , which is updated twice a day. Some users may see multiple lines associated with a username, as well as information on project space usage and quota of their Primary project, if there is one. The usage and quota of the home directory of a username is provided by the line including the file server your home directory is on (for more information, please visit Home Directories), while others (generated due to file copy) can be safely ignored.

You can check any user's home directory or a project's project space usage and quota by running:

grep -h 'userID' OR 'projectID' /users/reporting/storage/quota/*_quota.txt

Here is an example of project PZS0712:

$ grep -h PZS0712 /users/reporting/storage/quota/*_quota.txt
As of 2019-03-07T13:55:01.000000 project/group PZS0712 on /fs/project used 262 GiB of quota 2048 GiB and 166987 files of quota 200000 files
As of 2019-03-07T13:55:01.000000 userid xwang on /fs/project/PZS0712 used 0 GiB of quota 0 GiB and 21 files of quota 0 files
As of 2019-03-07T13:55:01.000000 userid dheisterberg on /fs/project/PZS0712 used 262 GiB of quota 0 GiB and 166961 files of quota 0 files
As of 2019-03-07T13:55:01.000000 userid amarcum on /fs/project/PZS0712 used 0 GiB of quota 0 GiB and 2 files of quota 0 files
As of 2019-03-07T13:55:01.000000 userid root on /fs/project/PZS0712 used 0 GiB of quota 0 GiB and 2 files of quota 0 files
As of 2019-03-07T13:55:01.000000 userid guilfoos on /fs/project/PZS0712 used 0 GiB of quota 0 GiB and 1 files of quota 0 files
As of 2019-03-07T13:51:23.000000 userid amarcum on /users/PZS0712 used 399.86 MiB of quota 500 GiB and 8710 files of quota 1000000 files

Here is an example for username amarcum:

$ grep -h amarcum /users/reporting/storage/quota/*_quota.txt
As of 2019-03-07T13:55:01.000000 userid amarcum on /fs/project/PZS0712 used 0 GiB of quota 0 GiB and 2 files of quota 0 files
As of 2019-03-07T13:56:39.000000 userid amarcum on /users/PZS0645 used 4.00 KiB of quota 500 GiB and 1 files of quota 1000000 files
As of 2019-03-07T13:56:39.000000 userid amarcum on /users/PZS0712 used 399.86 MiB of quota 500 GiB and 8710 files of quota 1000000 files
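The quota lines have a regular structure, so they can be parsed if you want to flag entries that are close to a limit. The following is a sketch under the assumption that lines keep the "As of ... used ... of quota ... and ... files of quota ... files" layout shown above. The function and field names are made up for this example. A file quota of 0, as on the per-user project lines, is treated here as "no quota reported".

```python
import re

QUOTA_LINE = re.compile(
    r"As of (?P<timestamp>\S+) (?P<kind>userid|project/group) (?P<name>\S+) "
    r"on (?P<path>\S+) used (?P<used>[\d.]+) ?(?P<used_unit>[A-Za-z]+) "
    r"of quota (?P<quota>[\d.]+) ?(?P<quota_unit>[A-Za-z]+) "
    r"and (?P<files>\d+) files of quota (?P<file_quota>\d+) files"
)


def parse_quota_report(text):
    """Return one dict per quota entry found in the report text."""
    records = []
    for match in QUOTA_LINE.finditer(text):
        record = match.groupdict()
        for key in ("used", "quota"):
            record[key] = float(record[key])
        for key in ("files", "file_quota"):
            record[key] = int(record[key])
        records.append(record)
    return records


def file_quota_fraction(record):
    """Fraction of the file-count quota in use, or None if no quota is reported."""
    if record["file_quota"] == 0:
        return None
    return record["files"] / record["file_quota"]
```

For the project line in the PZS0712 example, this gives 166987 / 200000, or about 83% of the file-count quota.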

Check the Usage for Projects and Users

The OSCusage command can provide detailed information about computational usage for a given project and user.

See the OSCusage command page for details.

HOWTO: Install a MATLAB toolbox

If you need to use a MATLAB toolbox that is not provided through our installations, you can follow these instructions. If you have any difficulties, contact OSC Help for assistance.

Gather your materials

First, we recommend making a new directory within your home directory in order to keep everything organized. You can use the unix command to make a new directory: "mkdir"

Now you can download the toolbox either to your desktop, and then upload it to OSC, or directly download it using the "wget" utility (if you know the URL for the file).

Now you can extract the downloaded file.

Adding the path

There are two methods on how to add the MATLAB toolbox path.

Method 1: Load up the Matlab GUI and click on "Set Path" and "Add folder"

Method 2: Use the "addpath" function in your script. More information on the function can be found here: https://www.mathworks.com/help/matlab/ref/addpath.html

Running the toolbox

Please refer to the instructions given alongside the toolbox. They should contain instructions on how to run the toolbox.

HOWTO: Install your own Perl modules

While we provide a number of Perl modules, you may need a module we do not provide. If it is a commonly used module, or one that is particularly difficult to compile, you can contact OSC Help for assistance, but we have provided an example below showing how to build and install your own Perl modules. Note, these instructions use "bash" shell syntax; this is our default shell, but if you are using something else (csh, tcsh, etc), some of the syntax may be different.

CPAN Minus

CPAN, the Comprehensive Perl Archive Network, is the primary source for publishing and fetching the latest modules and libraries for the Perl programming language. The default method for installing Perl modules, the "CPAN Shell", provides users with a great deal of power and flexibility, but at the cost of a complex configuration and an inelegant default setup.

Setting Up CPAN Minus

To use CPAN Minus with the system Perl (version 5.16.3), we need to ensure that the "cpanminus" module is loaded, if it hasn't been loaded already.

module load cpanminus

Please note that this step is not required if you have already loaded a version of Perl using the module load command.

Next, in order to use cpanminus, you will need to run the following command only ONCE:

perl -I $CPANMINUS_INC -Mlocal::lib

Using CPAN Minus

In most cases, using CPAN Minus to install modules is as simple as issuing a command in the following form:

cpanm [Module::Name]

For example, the following commands install three Perl modules:

cpanm Math::CDF
cpanm Set::IntervalTree
cpanm DB_File

Testing Perl Modules

To test that a Perl module can be imported, use commands like the following:

perl -e "require Math::CDF"
perl -e "require Set::IntervalTree"
perl -e "require DB_File"

The modules are installed correctly if no output is printed.

What Local Modules are Installed in my Account?

To show the local modules you have installed in your user account:

perldoc perllocal

Resetting Module Collection

If you ever want to start over with your Perl module collection, delete the following folders:

rm -r ~/perl5
rm -r ~/.cpanm

HOWTO: Locally Installing Software

Sometimes the best way to get access to a piece of software on the HPC systems is to install it yourself as a "local install". This document will walk you through the OSC-recommended procedure for maintaining local installs in your home directory or project space. The majority of this document describes the process of "manually" building and installing your software. We also show a partially automated approach through the use of a bash script in the Install Script section near the end.

Getting Started

Before installing your software, you should first prepare a place for it to live. We recommend the following directory structure, which you should create in the top-level of your home directory:

local

|-- src

|-- share

`-- lmodfiles

This structure is analogous to how OSC organizes the software we provide. Each directory serves a specific purpose:

- local - Gathers all the files related to your local installs into one directory, rather than cluttering your home directory. Applications will be installed into this directory with the format "appname/version". This allows you to easily store multiple versions of a particular software install if necessary.
- local/src - Stores the installers -- generally source directories -- for your software. Also stores the compressed archives ("tarballs") of your installers; useful if you want to reinstall later using different build options.
- local/share/lmodfiles - The standard place to store module files, which will allow you to dynamically add or remove locally installed applications from your environment.

You can create this structure with one command:

mkdir -p $HOME/local/src $HOME/local/share/lmodfiles

(NOTE: $HOME is defined by the shell as the full path of your home directory. You can view it from the command line with the command echo $HOME.)
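The same layout can be created under a different base directory, for example in project space. The sketch below is illustrative only: make_local_tree is a hypothetical function name, and the temporary directory stands in for whatever base path you actually use (it defaults to $HOME when no argument is given).

```shell
# Hypothetical helper: create the recommended layout under a chosen base directory.
make_local_tree() {
    local base="${1:-$HOME}"
    mkdir -p "$base/local/src" "$base/local/share/lmodfiles"
}

# Demonstrate in a throwaway directory so nothing in $HOME is touched.
demo_dir="$(mktemp -d)"
make_local_tree "$demo_dir"

# List the directories that were created, relative to the base.
(cd "$demo_dir" && find local -type d | sort)
```

Running make_local_tree with no argument is equivalent to the mkdir command shown above.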

Installing Software

Now that you have your directory structure created, you can install your software. For demonstration purposes, we will install a local copy of Git.

First, we need to get the source code onto the HPC filesystem. The easiest thing to do is find a download link, copy it, and use the wget tool to download it on the HPC. We'll download this into $HOME/local/src:

cd $HOME/local/src
wget https://github.com/git/git/archive/v2.9.0.tar.gz

Now extract the tar file:

tar zxvf v2.9.0.tar.gz

Next, we'll go into the source directory and build the program. Consult your application's documentation to determine how to install into $HOME/local/"software_name"/"version". Replace "software_name" with the software's name and "version" with the version you are installing, as demonstrated below. In this case, we'll use the configure tool's --prefix option to specify the install location.

You'll also want to specify a few variables to help make your application more compatible with our systems. We recommend specifying that you wish to use the Intel compilers and that you want to link the Intel libraries statically. This will prevent you from having to have the Intel module loaded in order to use your program. To accomplish this, add CC=icc CFLAGS=-static-intel to the end of your invocation of configure. If your application does not use configure, you can generally still set these variables somewhere in its Makefile or build script.

Then, we can build Git using the following commands:

cd git-2.9.0

autoconf # this creates the configure file

./configure --prefix=$HOME/local/git/2.9.0 CC=icc CFLAGS=-static-intel

make && make install

Your application should now be fully installed. However, before you can use it you will need to add the installation's directories to your path. To do this, you will need to create a module.

Creating a Module

Modules allow you to dynamically alter your environment to define environment variables and bring executables, libraries, and other features into your shell's search paths.

Automatically create a module

We can use the mkmod script to create a simple Lua module for the Git installation:

module load mkmod
create_module.sh git 2.9.0 $HOME/local/git/2.9.0

It will create the module $HOME/local/share/lmodfiles/git/2.9.0.lua. Please note that by default our mkmod script only creates module files that define a few basic environment variables: PATH, LD_LIBRARY_PATH, MANPATH, and GIT_HOME. These defaults may not cover all the paths you need. We can override them in this way:

module load mkmod
TOPDIR_LDPATH_LIST="lib:lib64" \
TOPDIR_PATH_LIST="bin:exe" \
create_module.sh git 2.9.0 $HOME/local/git/2.9.0

This adds $GIT_HOME/bin and $GIT_HOME/exe to PATH, and $GIT_HOME/lib and $GIT_HOME/lib64 to LD_LIBRARY_PATH.

We can also add other variables by using ENV1, ENV2, and more. For example, suppose we want to change the default editor to vim for Git:

module load mkmod
ENV1="GIT_EDITOR=vim" \
create_module.sh git 2.9.0 $HOME/local/git/2.9.0

Manually create a module

We will be using the filename 2.9.0.lua ("version".lua). A simple Lua module for our Git installation would be:

-- Local Variables
local name = "git"
local version = "2.9.0"

-- Locate Home Directory
local homedir = os.getenv("HOME")
local root = pathJoin(homedir, "local", name, version)

-- Set Basic Paths
prepend_path("PATH", pathJoin(root, "bin"))
prepend_path("LD_LIBRARY_PATH", root .. "/lib")
prepend_path("LIBRARY_PATH", root .. "/lib")
prepend_path("INCLUDE", root .. "/include")
prepend_path("CPATH", root .. "/include")
prepend_path("PKG_CONFIG_PATH", root .. "/lib/pkgconfig")
prepend_path("MANPATH", root .. "/share/man")

NOTE: For future module files, copy our sample modulefile from ~support/doc/modules/sample_module.lua. This module file follows the recommended design patterns laid out above and includes samples of many common module operations.

NOTE: TCL is cross-compatible and is converted to Lua when loaded. More documentation is available at https://www.tacc.utexas.edu/research-development/tacc-projects/lmod/ or by executing module help.

Initializing Modules

Any module file you create should be saved into your local lmodfiles directory ($HOME/local/share/lmodfiles). To prepare for future software installations, create a subdirectory within lmodfiles named after your software and add one module file to that directory for each version of the software installed.

In the case of our Git example, you should create the directory $HOME/local/share/lmodfiles/git and create a module file within that directory named 2.9.0.lua.

To make this module usable, you need to tell lmod where to look for it. You can do this by issuing the command module use $HOME/local/share/lmodfiles in our example. You can see this change by performing module avail. This will allow you to load your software using either module load git or module load git/2.9.0.

module use $HOME/local/share/lmodfiles and module load "software_name" need to be entered into the command line every time you enter a new session on the system.

If you install another version later on (let's say version 2.9.1) and want to create a module file for it, you need to make sure you call it 2.9.1.lua. When loading Git, lmod will automatically load the newer version. If you need to go back to an older version, you can do so by specifying the version you want: module load git/2.9.0.

To make sure you have the correct module file loaded, type which git, which should emit "~/local/git/2.9.0/bin/git" (NOTE: ~ is equivalent to $HOME).

To make sure the software was installed correctly and that the module is working, type git --version, which should emit "git version 2.9.0".
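Since the module use command has to be repeated in every new session, one option is to append it to a shell startup file. The sketch below is an assumption on our part, not a documented OSC recommendation: add_line_once is a hypothetical helper, and ~/.bashrc is assumed to be the startup file your login shell reads. The helper appends a line only if it is not already present, so running it twice does not create duplicates.

```shell
# Hypothetical helper: append a line to a file only if that exact line is not there yet.
add_line_once() {
    local line="$1" file="$2"
    touch "$file"
    # -x matches whole lines, -F treats the text literally (no regex).
    grep -qxF -e "$line" "$file" || printf '%s\n' "$line" >> "$file"
}

# Example (left commented out so nothing is changed by accident).
# The single quotes keep $HOME unexpanded until the startup file is read.
# add_line_once 'module use $HOME/local/share/lmodfiles' "$HOME/.bashrc"
```

Whether to also add a module load line is a matter of preference; loading modules automatically at login can make it harder to notice which software a job actually depends on.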

Automating With Install Script

Simplified versions of the scripts used to manage the central OSC software installations are provided at ~support/share/install-script. The idea is that you provide the minimal commands needed to obtain, compile, and install the software (usually some variation on wget, tar, ./configure, make, and make install) in a script, which then sources an OSC-maintained template that provides all of the "boilerplate" commands to create and manage a directory structure similar to that outlined in the Getting Started section above. You can copy an example install script from ~support/share/install-script/install-osc_sample.sh and follow the notes in that script, as well as in ~support/share/install-script/README.md, to modify it to install software of your choosing.

The install script places module files in $HOME/osc_apps/lmodfiles, so you will need to run module use $HOME/osc_apps/lmodfiles and module load [software-name] every time you enter a new session on the system and want to use the software that you have installed.

Further Reading

For more information about modules, be sure to read the webpage indicated at the end of module help. If you have any questions about modules or local installations, feel free to contact the OSC Help Desk at oschelp@osc.edu.

HOWTO: Manage Access Control Lists (ACLs)

An ACL (access control list) is a list of permissions associated with a file or directory. These permissions allow you to restrict access to a certain file or directory by user or group.

OSC supports NFSv4 ACLs on home directories and POSIX ACLs on the project and scratch file systems. See "HOWTO: Use NFSv4 ACL" for home directory ACL management, and the guide on how to use POSIX ACL for managing ACLs in the project and scratch file systems.

HOWTO: Use NFSv4 ACL

This document shows you how to use the NFSv4 ACL permissions system. An ACL (access control list) is a list of permissions associated with a file or directory. These permissions allow you to restrict access to a certain file or directory by user or group. NFSv4 ACLs provide more specific options than the typical POSIX read/write/execute permissions used in most systems.

These commands are useful for managing ACLs on home directories located under /users/<project-code>.

Understanding NFSv4 ACL

This is an example of an NFSv4 ACL:

A::user@nfsdomain.org:rxtncy

A::alice@nfsdomain.org:rxtncy

A::alice@nfsdomain.org:rxtncy

A::alice@nfsdomain.org:rxtncy

The following sections break down this example from left to right and describe further usage options.

ACE Type

The 'A' in the example is known as the ACE (access control entry) type. The 'A' denotes "Allow" meaning this ACL is allowing the user or group to perform actions requiring permissions. Anything that is not explicitly allowed is denied by default.

ACE Flags

An ACE can also carry one or more flags in its second field, as in the following variant of the example:

A:d:user@osc.edu:rxtncy

The 'd' used above is called an inheritance flag. It causes the ACL set on this directory to be automatically established on any new subdirectories. Inheritance flags only work on directories and not on files. Multiple inheritance flags can be used in combination or omitted entirely. Examples of inheritance flags are listed below:

| Flag | Name | Function |
|---|---|---|
| d | directory-inherit | New subdirectories will have the same ACE |
| f | file-inherit | New files will have the same ACE minus the inheritance flags |
| n | no-propagate-inherit | New subdirectories will inherit the ACE minus the inheritance flags |
| i | inherit-only | New files and subdirectories will have this ACE, but the ACE does not apply to the directory that carries the flag |

ACE Principal

The 'user@nfsdomain.org' is a principal. The principal denotes the people the ACL is allowing access to. Principals can be the following:

- A named user
  - Example: user@nfsdomain.org
- Special principals
  - OWNER@
  - GROUP@
  - EVERYONE@
- A group
  - Note: When the principal is a group, you need to add a group flag, 'g', as shown in the example below
  - A:g:group@osc.edu:rxtncy

ACE Permissions

The 'rxtncy' are the permissions the ACE is allowing. Permissions can be used in combination with each other. A list of permissions and what they do can be found below:

| Permission | Function |
|---|---|
| r | read-data (files) / list-directory (directories) |
| w | write-data (files) / create-file (directories) |
| a | append-data (files) / create-subdirectory (directories) |
| x | execute (files) / change-directory (directories) |
| d | delete the file/directory |
| D | delete-child : remove a file or subdirectory from the given directory (directories only) |
| t | read the attributes of the file/directory |
| T | write the attributes of the file/directory |
| n | read the named attributes of the file/directory |
| N | write the named attributes of the file/directory |
| c | read the file/directory ACL |
| C | write the file/directory ACL |
| o | change ownership of the file/directory |
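To make the four colon-separated fields concrete, here is a small sketch that splits an ACE string into its type, flags, principal, and permissions using only shell parameter expansion. The function name split_ace is hypothetical and is not part of the NFSv4 tools; it performs no validation and only illustrates the layout described above.

```shell
# Hypothetical helper: split an ACE of the form type:flags:principal:permissions.
split_ace() {
    local ace="$1" rest
    local ace_type flags principal perms

    ace_type=${ace%%:*}     # text before the first colon
    rest=${ace#*:}          # everything after the first colon
    flags=${rest%%:*}       # may be empty, as in "A::user@..."
    rest=${rest#*:}
    principal=${rest%%:*}
    perms=${rest#*:}

    printf 'type=%s flags=%s principal=%s permissions=%s\n' \
        "$ace_type" "$flags" "$principal" "$perms"
}

split_ace "A::user@nfsdomain.org:rxtncy"
split_ace "A:g:group@osc.edu:rxtncy"
```

For the first call the flags field is empty, which corresponds to an ACE with no inheritance or group flag.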

Note: Aliases such as 'R', 'W', and 'X' can be used as permissions. These work similarly to POSIX Read/Write/Execute. More detail can be found below.

| Alias | Name | Expansion |
|---|---|---|
| R | Read | rntcy |
| W | Write | watTNcCy (with D added to directory ACEs) |
| X | Execute | xtcy |
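The alias table can be expressed as a simple lookup. The sketch below is illustrative only: expand_alias is a hypothetical function, the second argument ("file" or "dir") is our own convention for choosing the directory form of 'W', and the position of the added 'D' in the output is arbitrary since the order of permission letters carries no meaning here.

```shell
# Hypothetical helper: expand an alias letter into the permissions from the table above.
expand_alias() {
    local name="$1" kind="${2:-file}"
    case "$name" in
        R) echo "rntcy" ;;
        W) if [ "$kind" = "dir" ]; then
               echo "watTNcCyD"   # D (delete-child) is added for directories
           else
               echo "watTNcCy"
           fi ;;
        X) echo "xtcy" ;;
        *) echo "unknown alias: $name" >&2
           return 1 ;;
    esac
}

expand_alias R
expand_alias W dir
expand_alias X
```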

Using NFSv4 ACL

This section shows you how to set, modify, and view ACLs.

Set and Modify ACLs

To set an ACE, use this command:

nfs4_setfacl [OPTIONS] COMMAND file

To modify an ACE, use this command:

nfs4_editfacl [OPTIONS] file

Where file is the name of your file or directory. More information on Options and Commands can be found below.

Commands

Commands are only used when first setting an ACE. Commands and their uses are listed below.

| COMMAND | FUNCTION |

|---|---|

| -a acl_spec [index] | add ACL entries in acl_spec at index (DEFAULT: 1) |

| -x acl_spec \| index | remove ACL entries or entry-at-index from ACL |

| -A file [index] | read ACL entries to add from file |

| -X file | read ACL entries to remove from file |

| -s acl_spec | set ACL to acl_spec (replaces existing ACL) |

| -S file | read ACL entries to set from file |