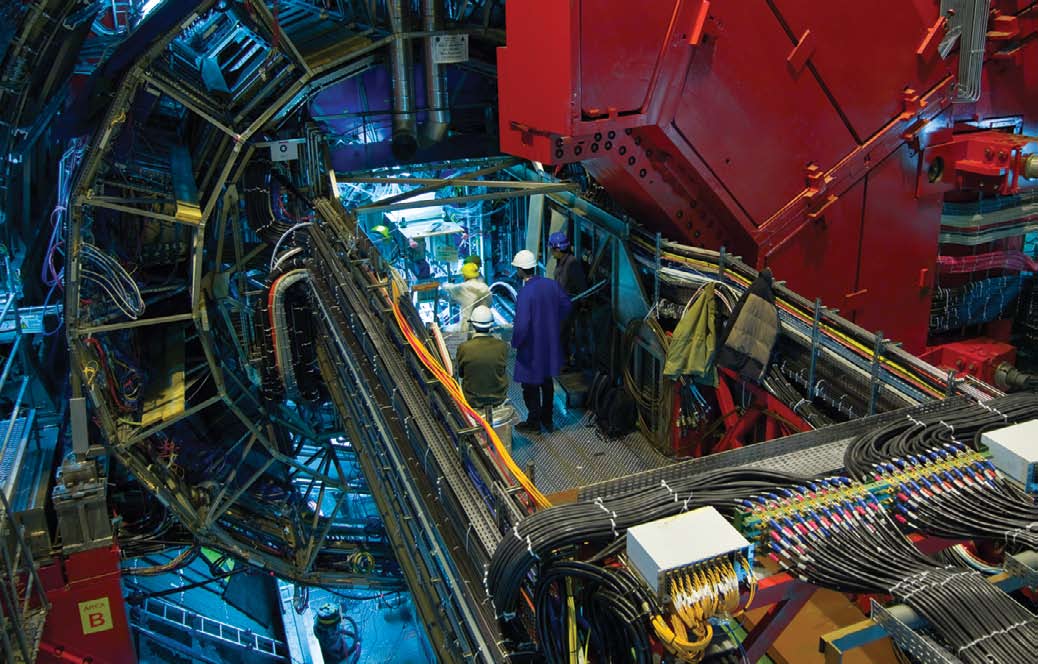

above: When the ALICE experiment collides lead atoms at nearly the speed of light, sophisticated detectors will dump data at the rate of more than one gigabyte per second into a worldwide network of research repositories, including the Ohio Supercomputer Center.

below: Physicists tracked muons from a cosmic-ray shower event in June 2008, partly as a test of ALICE’s Time Projection Chamber, which will track similar particles when the supercollider runs the first ALICE experiments.

In a lush valley on the border of Switzerland and France, more than 1,000 physicists, engineers, and technicians from 30 countries are working to answer questions about the fundamental nature of matter. Inside the Large Hadron Collider at CERN, the European Organization for Nuclear Research, their massive research project recreates on a small scale the explosive first moments of the universe.

As part of the ALICE experiment, short for A Large Ion Collider Experiment, physicists accelerate lead atoms to nearly the speed of light, collide their nuclei, and then visualize the expelled particles that make up the protons and neutrons of the lead nuclei: quarks and gluons. Sensitive detectors measure the particles’ reactions, recording approximately 1.25 gigabytes of data per second, enough to fill a single-layer DVD roughly every four seconds.
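To put the 1.25-gigabyte-per-second rate in more familiar terms, the conversion can be sketched in a few lines of Python. This is a back-of-the-envelope illustration, not part of the ALICE computing model; it assumes decimal units (1 GB = 10^9 bytes) and a 4.7 GB single-layer DVD, and the function names are hypothetical.

```python
# Back-of-the-envelope conversion of the ALICE recording rate into DVD equivalents.
# Assumptions (not from the article): decimal gigabytes and a 4.7 GB
# single-layer DVD. Function names are illustrative only.

DATA_RATE_GB_PER_S = 1.25  # recording rate quoted for ALICE
DVD_CAPACITY_GB = 4.7      # assumed single-layer DVD capacity


def gigabytes_per_minute(rate_gb_per_s: float) -> float:
    """Data volume accumulated in one minute at a constant rate."""
    return rate_gb_per_s * 60


def dvds_per_minute(rate_gb_per_s: float, dvd_gb: float = DVD_CAPACITY_GB) -> float:
    """Number of DVDs that one minute of data would fill."""
    return gigabytes_per_minute(rate_gb_per_s) / dvd_gb


def seconds_per_dvd(rate_gb_per_s: float, dvd_gb: float = DVD_CAPACITY_GB) -> float:
    """Time needed to fill a single DVD at a constant rate."""
    return dvd_gb / rate_gb_per_s


if __name__ == "__main__":
    print(f"{gigabytes_per_minute(DATA_RATE_GB_PER_S):.1f} GB per minute")  # 75.0 GB per minute
    print(f"{dvds_per_minute(DATA_RATE_GB_PER_S):.1f} DVDs per minute")     # 16.0 DVDs per minute
    print(f"{seconds_per_dvd(DATA_RATE_GB_PER_S):.2f} s per DVD")           # 3.76 s per DVD
```

Under these assumptions, one minute of recording comes to about 75 GB, or roughly 16 single-layer DVDs.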

To analyze tracking data from up to 8,000 collisions per second, researchers are employing a worldwide network of computing resources, including those of the Ohio Supercomputer Center.

“Traditionally, researchers would do much, if not all, of their computing at one central computing center. This cannot be done with the ALICE experiments because of the large data volumes,” said Thomas J. Humanic, Ph.D., professor of physics at The Ohio State University.

The massive data sets are distributed to researchers around the world through high-speed connections to the “Grid,” a network of computer clusters at scientific institutions, including the Ohio Supercomputer Center.

Beyond serving as a storage and analysis resource for researchers working on the project, “OSC has been critical in the development and testing of a computing model to analyze the ALICE data,” Humanic said.

OSC has already provided 300,000 CPU hours for data simulations and has allocated up to one million hours for analysis of the first experimental data, collected in September 2008. ALICE has also yielded many valuable second-order benefits in areas such as distributed computing, mass data storage and access, software development, and instrument design.

--

Project leads: Thomas J. Humanic, Ph.D., The Ohio State University; Paul Buerger, Ph.D., OSC; Douglas L. Olson, Ph.D., Lawrence Berkeley National Laboratory; Lawrence Pinsky, Ph.D., University of Houston; Ron Soltz, Ph.D., Lawrence Livermore National Laboratory; & Michael Lisa, The Ohio State University

Research title: Developing extensions to grid computing for ALICE at the LHC

Funding source: National Science Foundation