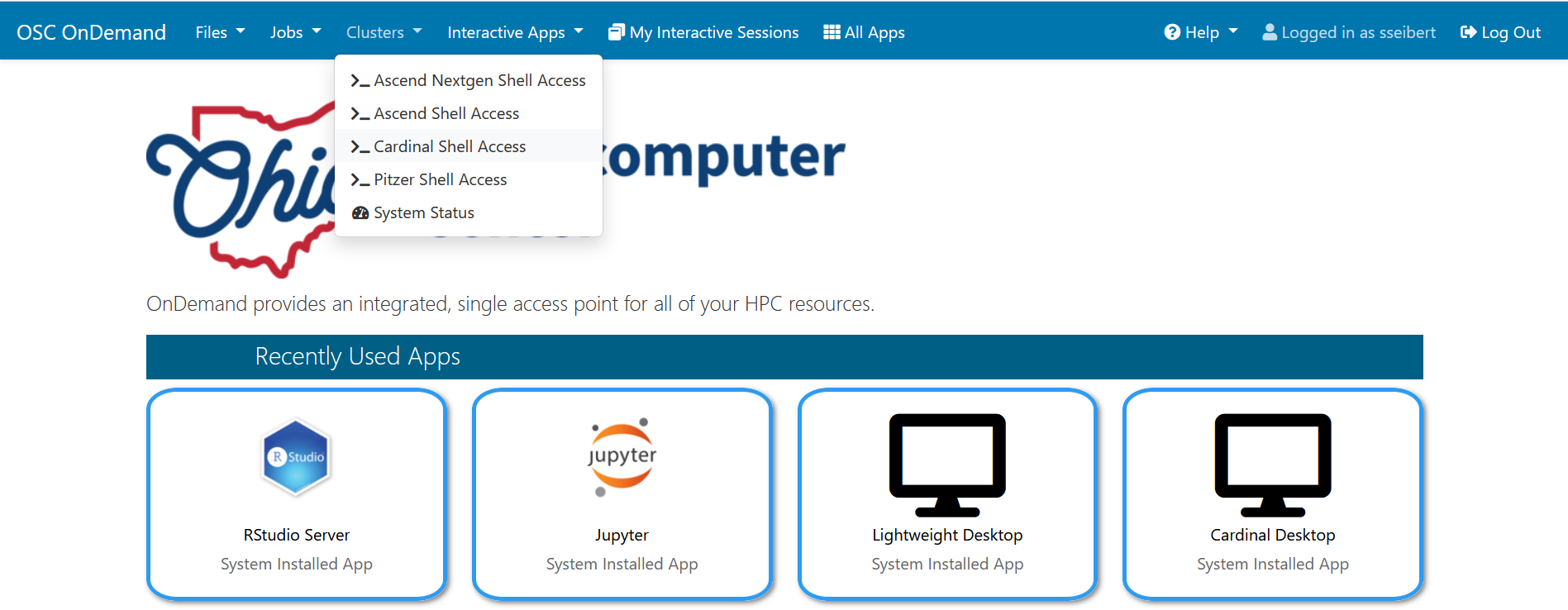

To submit non-interactive Spark and Hadoop jobs, we will access the Cardinal cluster through the shell app after logging in to OSC OnDemand. Click on Clusters, then select Cardinal Shell Access.

This opens a shell on the Cardinal cluster where you can enter UNIX commands. First, copy the required input files to your home directory by entering the following command:

```bash
cp -rf /users/PZS0645/support/workshop/Bigdata/Non-interactive $HOME/
```

This copies a directory called Non-interactive to your `$HOME` directory. We will start by submitting two Spark jobs. Change to the spark directory:

```bash
cd $HOME/Non-interactive/spark
```

Check the contents of the directory with the `ls` command:

```bash
ls
```

Use the `more` command to view the contents of a file, for example the stati.sbatch job script:

```bash
more stati.sbatch
```

You can change job parameters, such as the number of nodes and the walltime limit, in the `.sbatch` file.
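The job parameters are the `#SBATCH` directive lines near the top of the script. The sketch below shows how to list those lines with `grep` and how to change the walltime with GNU `sed`. It uses a hypothetical header. The job name, node count, and walltime values are illustrative assumptions, not the contents of the workshop's stati.sbatch.

```bash
# Sketch: list and edit the scheduler directives in a batch script.
# The header below is HYPOTHETICAL; the workshop's stati.sbatch may use other values.
script=$(mktemp)
cat > "$script" <<'EOF'
#!/bin/bash
#SBATCH --job-name=stati
#SBATCH --nodes=1
#SBATCH --time=00:10:00
# (the job's own commands would follow here)
EOF

# Show only the #SBATCH lines, i.e. the job parameters.
grep '^#SBATCH' "$script"

# Raise the walltime limit to 30 minutes by rewriting the --time line in place (GNU sed).
sed -i 's/^#SBATCH --time=.*/#SBATCH --time=00:30:00/' "$script"
grep '^#SBATCH --time' "$script"
```

On your own copy, `grep '^#SBATCH' stati.sbatch` lists the script's actual parameters. Options passed on the `sbatch` command line override the matching directives in the script, so `sbatch --time=00:30:00 stati.sbatch` is an alternative to editing the file.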

Submit both jobs with the `sbatch` command:

```bash
sbatch stati.sbatch
sbatch sql.sbatch
```
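Below is a minimal sketch of a helper that submits every `.sbatch` file in the current directory. It assumes the directory contains only the job scripts you want to run. The function name `submit_all` is hypothetical. It relies on `sbatch --parsable`, which prints only the job ID (followed by `;cluster` on multi-cluster systems), and it reports one `script jobid` pair per line.

```bash
# Sketch: submit every *.sbatch script in the current directory and print
# "<script> <jobid>" for each. Hypothetical helper, not part of the workshop files.
submit_all() {
  local script id
  for script in *.sbatch; do
    [ -e "$script" ] || continue          # no matches: the glob stays literal
    id=$(sbatch --parsable "$script") || return 1
    echo "$script ${id%%;*}"              # strip an optional ";cluster" suffix
  done
}

# Usage, from $HOME/Non-interactive/spark:
#   submit_all
```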

Check the status of your jobs with the `squeue` command. The ST column shows each job's state: PD for pending, R for running, or CD for completed.

```bash
squeue -u `whoami`
```
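If you would rather not rerun `squeue` by hand, you can poll it. The function below, `wait_for_jobs`, is a hypothetical helper and not part of the workshop files. It calls `squeue -h -u` at a fixed interval and returns once none of your jobs is listed. The `-h` option suppresses the header line, so empty output means your queue is empty. Because it checks all of your jobs, it will also wait for any unrelated jobs you have running.

```bash
# Sketch: block until none of your jobs is left in the queue.
# Hypothetical helper; the 30-second default interval is an assumption.
wait_for_jobs() {
  local interval=${1:-30}               # seconds between checks
  local user=${USER:-$(whoami)}
  local queued
  while :; do
    queued=$(squeue -h -u "$user") || return 1   # stop if squeue itself fails
    [ -z "$queued" ] && return 0                 # no jobs listed: done
    sleep "$interval"
  done
}
```

For example, `wait_for_jobs 60` checks once a minute and returns when both Spark jobs have left the queue.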

When the jobs complete, each one produces a `jobname.error` file and a `jobname.out` file, where `jobname` is the name of the job. You can view the output with the `more` command:

```bash
more jobname.out
```
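You can also get an overview of every job's output at once. The sketch below pairs each `jobname.out` with its `jobname.error` and prints the line count of each. The function name `summarize_job_outputs` is hypothetical. The sketch assumes the naming described above. Spark often writes its log messages to standard error, so a non-empty `.error` file does not by itself mean the job failed. Open the file with `more` to check.

```bash
# Sketch: for every <jobname>.out in the current directory, print
# "<jobname> out=<lines> err=<lines>". A missing .error file counts as err=0.
summarize_job_outputs() {
  local out name err n_out n_err
  for out in *.out; do
    [ -e "$out" ] || continue             # no .out files: the glob stays literal
    name=${out%.out}
    err="$name.error"
    n_out=$(( $(wc -l < "$out") ))        # arithmetic strips any padding from wc
    n_err=0
    [ -f "$err" ] && n_err=$(( $(wc -l < "$err") ))
    echo "$name out=$n_out err=$n_err"
  done
}
```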

Next, we will submit two Hadoop jobs. Change to the hadoop directory and list its contents:

```bash
cd $HOME/Non-interactive/hadoop
ls
```

Submit the jobs with the `sbatch` command:

```bash
sbatch sub-grep.sbatch
sbatch sub-wordcount.sbatch
```

When the jobs complete, two directories, grep-out and wordcount-out, are created. To check the results:

```bash
cd grep-out
more part-r-00000
cd ../wordcount-out
more part-r-00000
```
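Suppose the wordcount job follows Hadoop's standard WordCount example. In that case, each line of part-r-00000 holds a word and its count separated by a tab. The hypothetical helper `top_words` below relies on that assumption. It sorts by the count column and prints the most frequent words. It accepts several result files, because a job run with multiple reducers splits its output across part-r-00000, part-r-00001, and so on.

```bash
# Sketch: print the N most frequent words from WordCount result files.
# Assumes each line is "<word><TAB><count>"; check part-r-00000 first.
top_words() {
  local n=$1
  shift                                   # remaining arguments are the result files
  sort -t $'\t' -k2,2nr "$@" | head -n "$n"
}
```

For example, from `$HOME/Non-interactive/hadoop`, run `top_words 10 wordcount-out/part-r-*`.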