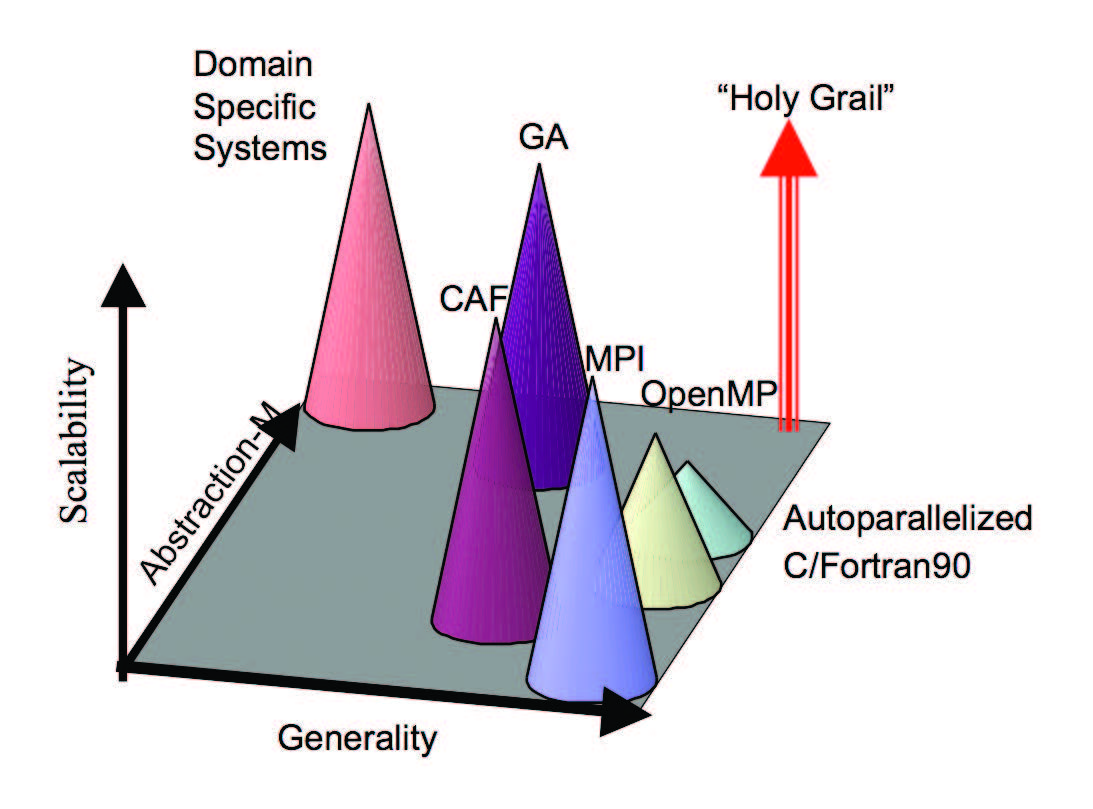

The Partitioned Global Address Space (PGAS) programming model has attracted considerable attention in HPC circles, primarily because it offers application programmers the convenience of globally addressable memory along with the locality control needed for scalability.

This makes PGAS models an attractive alternative to the hybrid MPI/OpenMP model for petascale computing systems. To further develop the PGAS model, Ponnuswamy Sadayappan, Ph.D., professor of computer science and engineering at The Ohio State University, is building a collaborative research group that brings together his team and staff members of the Ohio Supercomputer Center (OSC).

“Although some significant production applications like NWChem have been implemented using the Global Arrays PGAS model, the overall use of PGAS models for developing parallel applications has been very limited,” said Sadayappan. “Several advances are needed for PGAS to become more widely adopted, including compiler/runtime support for enabling existing sequential codes to be transformed and new parallel applications to be developed using PGAS models. The research collaboration with OSC provides significant opportunities to work with the developers of production applications to implement and evaluate our research advances in compiler/runtime systems for PGAS computing.”

The group will also explore the integration of several popular HPC programming languages with the PGAS model, according to Ashok Krishnamurthy, interim co-executive director of OSC. Sadayappan has worked with OSC on a number of funded projects, including the development of high-performance parallel software for electronic structure calculations and compiler/runtime optimization techniques for multicore CPUs and GPUs.

--

Project lead: Ponnuswamy Sadayappan, The Ohio State University

Research title: A Parallel Global Address Space framework for petascale computing

Funding source: The Ohio State University, Ohio Supercomputer Center