The cornerstone of the Ohio Supercomputer Center's STEM Institute is team-based, immersive project work. Groups of up to four students collaborate on diverse and challenging research-level projects alongside a project leader (the staff member who conceived and designed the project).

Current SI Projects

OSC OnDemand App Development

OnDemand is OSC's innovative web-based portal for accessing high performance computing services. OnDemand allows clients to use HPC without a big learning curve, lowering the barrier of entry to the world of supercomputing. With OnDemand, OSC users use a web interface to interact with OSC’s supercomputing resources. OnDemand enables users to upload and download files, create, edit, submit, and monitor jobs, run GUI applications, and connect via SSH, all via a web browser, with no client software to install and configure. OnDemand is a special deployment of OSC’s NSF-funded Open OnDemand platform which is currently in use at more than a dozen academic institutions across the US.

In this project, the team will work in groups of two students developing web-based applications to extend OSC OnDemand’s functionality. We will build apps to provide visibility to users and administrators about disk usage and batch jobs on the clusters. Apps we build will include displaying estimates on when queued jobs will start, statistics on historical usage, and apps to display current and historical disk usage. We will also explore building some simple apps to help users submit batch jobs running simulations or using solvers in a variety of scientific domains. These apps have the potential help OSC users complete their research and may have an impact outside of OSC if deployed at other institutions running OnDemand.

Comet Hunting

This project involves a real world application of finding comets in sun observation images from the SOHO (Solar and Heliospheric Observatory) spacecraft.

SOHO is a cooperative mission between the European Space Agency (ESA) and the National Aeronautics and Space Administration (NASA). SOHO studies the sun from deep down in its core out to 32 solar radii. The spacecraft orbits the L1 Lagrangian point. From this orbit, SOHO is able to observe the sun 24 hours a day. Even though SOHO's primary objectives relate to solar and heliospheric physics, the onboard LASCO instrument has become the most prolific comet discoverer in history!

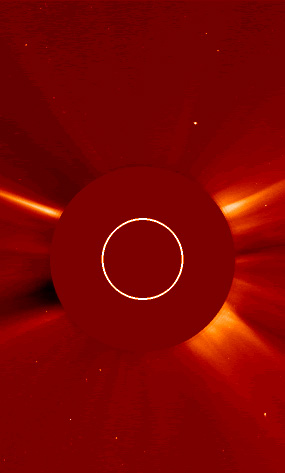

LASCO (Large Angle Spectrometric Coronagraph) is able to take images of the solar corona by blocking the light coming directly from the Sun with an occulter disk, creating an artificial eclipse within the instrument itself. LASCO images are automatically posted on the web approximately every 20 minute. Since LASCO began taking observations in January of 1996, the C2 and C3 coronagraphs have observed over 950 new comets and 9 known comets. The vast majority of these comets were discovered by amateur astronomers who closely examine the images for potential comets. Below is a typical image recently taken by SOHO.

This project will use the MATLAB software package to develop algorithms which can automatically analyze these images for potential comets. MATLAB is a high-level programming environment very popular with scientists and engineers because of its powerful toolboxes and easy to use scripting language. Basic algorithms from the image processing toolbox will be utilized to find comets using the following general steps:

- Load original images into MATLAB

- Process images to isolate all bright spots and eliminate glare due to solar ejections

- Compare spots in subsequent images to find potential comet trajectories

- Analyze trajectories to ensure they meet known characteristics

- Highlight possible comets in original images and create output movie

Machine Learning Movie Night

This project involves an exploration of IMDB movie reviews (50k) for supervised machine learning to predict positive or negative sentiment.

How do computers “learn”? How do they predict what movie you would enjoy? What are the internal representations, the “digital fingerprint” that makes this learning possible?

Natural language processing is a rapidly evolving field of computer science that uses human language as input and/or output and transforms human-authored content, like movie reviews, into a numerical representation appropriate for use with machine learning algorithms. New representations for text and speech are being developed constantly, with increasing sensitivity to context and expressive power.

Supervised machine learning makes associations between input feature representations and their labels. For example, a movie review could be encoded as a list of all the words it contains and training would help to learn weights associated with words, and how much each word contributes to a ‘positive’ or ‘negative’ sentiment label.

Participants will use Python and sklearn software to:

- Extract feature representations of text movie reviews, including Bag-of-Words, Averaged Word Vectors, Tf-idf, and N-grams

- Generate word clouds to visualize groups of documents and their characteristic words

- Run basic machine learning algorithms including Naïve Bayes and Random Forests

- Learn about training/test split for conducting machine learning experiments

- Evaluate feature choice and its impact on test results

Time permitting, participants will also use more advanced deep learning to predict new movies a user is likely to enjoy, given their past viewing habits.

Penetration Testing

Introduction

Project

Students will work through training exercises offered by hackthebox, designed to get them up to speed on the basics of penetration testing. The training exercises are hands-on as penetration testing requires one to be directly familiar with the systems under test.

Different penetration testing topics that will be covered, include but are not limited to:

- Nmap, a command line tool to gather network information about target systems.

- SQL injection basics

- Common privilege escalation methods

After students have completed the basics of penetration testing, they will be asked to review and discover vulnerabilities of information systems in an isolated environment. Finally, the group will create a writeup of their penetration testing discoveries, and present what was learned. The writeup will include the process of discovering the vulnerability, how the vulnerability was exploited, and steps to take to remediate the vulnerability.

Protein Folding

Project members will learn about proteins, why they are important, why protein shape and folding matters, traditional methods for identifying a protein's shape, and get an introduction to AlphaFold, which won the 2024 Nobel Prize in Chemistry. Students will then learn how to use Python and AlphaFold on the HPC systems to solve protein folding problems.

Previous SI Projects

View previous SI projects

- Lab-on-a-chip Nanofluidics

- Dark Mater in Galaxies

- Cancer Cell Migration, Invasion, and Metastasis

- Study of Chaotic Motions

- Space Frame Race Car Chassis Analysis

- Computational Chemistry

- Cryptanalysis

- Crystal Growth in a Computer

- Electron Scattering Project

- Reed-Frost Epidemic Model

- Fluid Dynamics Project

- Molecular Dynamics of the Bird Flu Virus

- Network Forensics

- Game Programming and Motion Capture Project

- Interactive Virtual Environments

- Mechanical Engineering Analysis Using the Finite Element Method

- N-Body Particle Dynamics

- Networking Design and Engineering

- Artificial Neural Network Project

- Parallel Processing Project

- First-Order Phase Transition

- Puzzle Image Processing Project

- Retro SI Programming

- Eclipsing Binary Stars

- Three-Dimensional Interactive Environments Using VRML

- The Effects of Visual Attention on Choice